31 Jan Feature Selectivity in Vision

This post is another in the series on specialization, in which the author stresses the need for very heterogeneous models for imitating brain capabilities with computers. An important discovery of neurophysiological and cybernetic research is that many neurons, particularly those in areas of the brain that specialize in processing perceptual data, are feature selective. Vision processing is a good example. Some neurons in the visual cortex respond specifically to color, some to light-dark contrast, and others to the orientation of lines (Hubel & Wiesel, 1962, 1968; von der Malsburg, 1973). Selectivity to these types of features develops prior to birth in the striate cortex (Brodmann’s area 17 or the “primary visual cortex”) and becomes tuned during the latter part of fetal development and early infancy (Hubel & Wiesel, 1979).

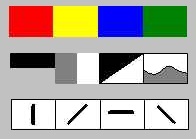

With specialized cells, each registering one specific aspect of a stimulus (image or sound…), the brain can build composite representations of objects, much as a video monitor builds a picture from pixels. Because this image-interpretation system is linked to the rest of the brain, complex patterns of activation can arise whenever an image pattern that has been perceived more than once is recognized. The importance of this discovery is that it suggests an explicit model of knowledge coding.

- Color-selective cells become activated in response to perceived colors.

- Contrast-selective cells detect borders in images. Taken together, the borders in an image define its shapes.

- Orientation-selective cells have been extensively researched in cats. You may have heard of cats that run into chair legs after being raised in rooms with only horizontal lines.

These specialized processing elements (cells) collaborate to divide and conquer, assembling the whole from the parts, and creating an input signal pattern that can be compared with known patterns in the brain.
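The division of labor described above can be sketched in a few lines of Python. This is only a toy illustration, not a model of real neurons: the 4×4 grayscale grid, the function names `contrast_cell`, `orientation_cell` and `activation_pattern`, and the neighbor-difference rule are all assumptions made up for this example.

```python
# Toy "feature-selective cells" over a tiny grayscale image (0 = dark, 9 = light).
# Every name and rule here is hypothetical and chosen only for illustration.

IMAGE = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]


def _differences(image, row, col):
    """Return (dx, dy): brightness change to the right and lower neighbors."""
    here = image[row][col]
    dx = abs(image[row][col + 1] - here) if col + 1 < len(image[row]) else 0
    dy = abs(image[row + 1][col] - here) if row + 1 < len(image) else 0
    return dx, dy


def contrast_cell(image, row, col):
    """Respond to light-dark contrast: the larger of the two neighbor differences."""
    return max(_differences(image, row, col))


def orientation_cell(image, row, col):
    """Label the local border 'vertical' or 'horizontal', or None if there is no border."""
    dx, dy = _differences(image, row, col)
    if dx == 0 and dy == 0:
        return None
    # A brightness change along x means the border itself runs vertically.
    return "vertical" if dx >= dy else "horizontal"


def activation_pattern(image):
    """Collect every cell's response into one distributed pattern keyed by position."""
    return {
        (r, c): {
            "contrast": contrast_cell(image, r, c),
            "orientation": orientation_cell(image, r, c),
        }
        for r, row in enumerate(image)
        for c, _ in enumerate(row)
    }


pattern = activation_pattern(IMAGE)
print(pattern[(0, 1)])  # the position just left of the dark-to-light border
```

Each function looks at only one aspect of the stimulus, and only the combined dictionary says anything about the image as a whole. That is the point of the sketch: the parts are simple, and the pattern is what gets compared with known patterns.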

| Understanding Context Cross-Reference | |
|---|---|
| Prior Post | Next Post |
| From Aristotle to the Enchanted Loom | Mapping a Thought |
| Definitions | References |
| perception, stimulus | Hubel & Wiesel 1962, 1968 |
| cybernetic, pattern | Mesgarani and Chang on NPR |
| specialization, response | Hubel & Wiesel 1979 |
| activation | von der Malsburg 1973 |

Scene Analysis

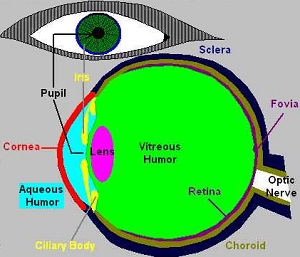

Feature selectivity permits the visual system to generate a unique response to each unique set of visual stimuli presented to the eyes. As feature-selective cells respond to the parameters of an image, a specific pattern of activation is created in the striate cortex. Wernicke’s area may respond in the same way to features of sounds. The scene is automatically analyzed, or broken apart, into a distributed representation of many activated feature-selective cells.

Since initial analysis occurs in massive parallelism across a single layer or a few tightly linked layers, the analysis is complete within a few milliseconds of the reception of a stimulus pattern. The pattern of activation created in area 17 at the extreme posterior of the brain will thus be distributed very quickly.

This distributed pattern of activation may be compared to a mosaic, similar to the bit-mapped representations common in computerized image analysis or to the pixels on a television screen. The resulting conceptual mosaic generated in other parts of the brain, however, is more like a multi-dimensional map of associated concepts and features in the image. An image of an object may be synthesized by combining splotches of color, discerned shapes, and lines into a composite mosaic.

Mesgarani and Chang have found that similar brain processes apply to perceived sounds of speech. Electrodes placed on the surface of the brain let researchers see precisely how neurons, or clusters of neurons, responded as each bit of sound passed through. They observed that some cells responded specifically to plosives, like the initial puh-sounds in “Peter Piper Picked a Peck of Pickled Peppers,” while other cells responded to specific vowel sounds. Chang says: “We were shocked to see the kind of selectivity. Those sets of neurons were highly responsive to particular speech sounds.” He went on to explain that “these sounds are what linguists call phonemic features, the most basic components of speech. There are about a dozen of these features. And they can be combined to make phonemes, the sounds that allow us to tell the difference between words like dad and words like bad.” (From NPR story and Journal of Nature)
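The idea that a small set of features combines into phonemes, and that one feature can separate “dad” from “bad”, can be shown with a tiny lookup table. The sketch below is an assumption-laden simplification: the three-feature inventory, the names `PHONEME_FEATURES`, `distinguishing_features` and `first_difference`, and the shortcut of treating each letter as one sound are all invented for illustration and are not taken from the research described above.

```python
# Hypothetical, heavily simplified feature inventory. Real phonological
# feature systems are richer; only three consonants are listed here.
PHONEME_FEATURES = {
    "b": frozenset({"plosive", "voiced", "bilabial"}),
    "d": frozenset({"plosive", "voiced", "alveolar"}),
    "p": frozenset({"plosive", "voiceless", "bilabial"}),
}


def distinguishing_features(a, b):
    """Return the features that belong to exactly one of the two sounds."""
    return PHONEME_FEATURES[a] ^ PHONEME_FEATURES[b]


def first_difference(word_a, word_b):
    """Find the first position where two words differ and say which features differ.

    Assumes, for simplicity, that each letter stands for one sound.
    Returns None when no differing position is found.
    """
    for sound_a, sound_b in zip(word_a, word_b):
        if sound_a != sound_b:
            return sound_a, sound_b, distinguishing_features(sound_a, sound_b)
    return None


sound_a, sound_b, features = first_difference("dad", "bad")
print(sound_a, sound_b, sorted(features))
```

In this toy table, /d/ and /b/ share “plosive” and “voiced” and differ only in place of articulation, so a cell tuned to that one feature would be enough to tell the two words apart.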

Interpretation

As the pattern of activation spreads forward in the brain, a representation of objects in the scene is synthesized by a pattern of activation in the neurons of areas 18 and 19 – the association areas of the visual cortex. The process is called binding. Activations in the association area directly follow activations in the primary visual cortex and resolve within 20 to 40 milliseconds of the initial stimulus.

The patterns can be channeled through filters to find important objects or parts of objects in the scene. These patterns can then activate a chain reaction of recognition. Obviously, recognition is dependent on the link structure that has formed in the brain throughout the individual’s learning cycle.
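One way to picture recognition as a comparison between an incoming pattern and patterns already learned is a simple overlap score. The sketch below is a loose analogy under stated assumptions, not a claim about how the cortex does it: the stored patterns in `KNOWN_PATTERNS`, the feature labels, the Jaccard overlap measure and the 0.5 threshold are all hypothetical choices made for this example.

```python
# Hypothetical "learned" patterns: each known object is a set of active features.
KNOWN_PATTERNS = {
    "apple": {"red", "round", "curved-edge"},
    "banana": {"yellow", "elongated", "curved-edge"},
    "brick": {"red", "rectangular", "straight-edge"},
}


def overlap(a, b):
    """Jaccard similarity: shared features divided by all features in either set."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recognize(active, known=KNOWN_PATTERNS, threshold=0.5):
    """Return the best-matching known label, or None if nothing matches well enough."""
    best_label, best_score = None, 0.0
    for label, stored in known.items():
        score = overlap(active, stored)
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= threshold else None


print(recognize({"red", "round", "curved-edge", "shiny"}))
print(recognize({"blue", "square"}))
```

What can be recognized depends entirely on what is already stored in `KNOWN_PATTERNS`, which mirrors the point that recognition depends on the links formed through learning.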

The process of object extraction will be driven by more complex cognitive processes than feature extraction because object extraction implies some level of recognition. Recognition may require integration of information in two or more of the perceptual areas, such as visual and olfactory or audio and tactile information that can provide a multi-dimensional interpretation of objects. Recognition is discussed in more detail in my posts on perception and cognition.

The visual cortex includes three Brodmann areas: 17, 18 and 19. The binding process for images occurs in areas 18 and 19, probably resulting in immediate recognition of familiar images. Complex image recognition and interpretation take place in the frontal lobe and other parts of the cerebrum. Binding with other sensory and conceptual patterns, then, occurs in integrating components of the brain.

Can computers and devices like smartphones integrate inputs from multiple, heterogeneous components? Yes. But most artificial intelligence approaches have been based on a single, homogeneous strategy such as backward-chaining inference, case-based reasoning, neural networks or expert systems. As we approach the age of knowledge, really smart systems will need to integrate multiple specialized components to deliver the capabilities and outcomes that will be required once we graduate from the information age. Furthermore, the system that accurately interprets and understands your intent, based on the things you say, will need multiple, heterogeneous components bound together by context.
