15 Mar The Random Hamlet

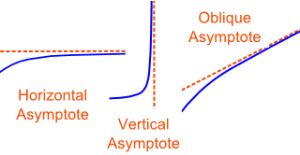

For building an automated language understanding and translation system, I believe that adding random factors to the model for “fuzzy” reasoning is needed and important. The questions are:

- How do we use random factors to improve the outcomes of the process?

- Where do we insert random factors into the model?

- How do we implement random factor generation?

- How random do the factors need to be?

Today’s post is strictly concerned with the last question because I have encountered divergent positions on the meaning of random.

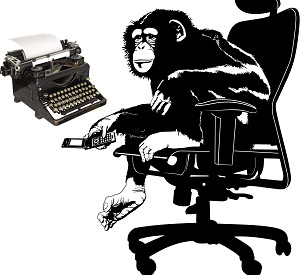

A common illustration of random probability theory based on the asymptotic model proposes that infinite random character generation will yield full-scale literary works of fact and fiction. The theory is expressed in terms of a monkey and William Shakespeare’s Hamlet: Given a monkey and a typewriter (or a million monkeys with typewriters), in the course of randomly typing forever, the monkey will eventually churn out the script of Hamlet verbatim. The monkey makes this seem less credible, so let’s give the theory a little beef: Given an infinitely powerful computer capable of generating characters perfectly randomly, over the span of eternity, eventually the computer will generate the script of Hamlet.

A common illustration of random probability theory based on the asymptotic model proposes that infinite random character generation will yield full-scale literary works of fact and fiction. The theory is expressed in terms of a monkey and William Shakespeare’s Hamlet: Given a monkey and a typewriter (or a million monkeys with typewriters), in the course of randomly typing forever, the monkey will eventually churn out the script of Hamlet verbatim. The monkey makes this seem less credible, so let’s give the theory a little beef: Given an infinitely powerful computer capable of generating characters perfectly randomly, over the span of eternity, eventually the computer will generate the script of Hamlet.

Of course, now that we’ve raised the ante by bringing in the big guns, shall we extend the theory to its logical conclusion? Since probability is presumed, in this model, to be asymptotic – always approaching but never reaching zero, why limit the machine to one good play? That same computer will obviously be able to randomly generate the entire body literature of human-kind, in chronological order, with intelligent footnotes. Granted, this is extending the theory ad nauseum, but the “anything is possible” assumption gives us all that and more if we just decide to take it and run with it.

| Understanding Context Cross-Reference |

|---|

| Click on these Links to other posts and glossary/bibliography references |

| Prior Post | Next Post |

| That's so Random! | Monkeys with Typewriters at the Threshold |

| Definitions | References |

| random probability | Adams 1982 Math is Fun |

| sample space modeling | Folger Library's Hamlet |

Defining the Problem

Before we try to tackle the body literature of mankind, shall we take a closer look at the random Hamlet? This example of random probability theory is a problem for which we can describe a model. In Section 7 we will discuss problems and sample space. The sample space for the random Hamlet theory is the text of Hamlet. I have excerpted a small chunk of the sample space taken from the Folger Library general reader’s version of Hamlet. Since carriage returns and capitalization are individually no more complex than any other of the characters, there is no reason to constrain the problem unduly by permitting unformatted text.

The assumption that the laws of probability running amuck for eternity could produce such an event would surely apply to the complete works of Shakespeare in correct chronological order. If that is true, then it is equally probable that the same computer could generate the complete works of Shakespeare with the same spelling errors as William inadvertently introduced into the original folios.

Can probability theory be exercised ad infinitum to imagine machines that could do anything? If the laws of random probability operate in a vacuum without the interaction of other laws: Yes! Otherwise: No!

- Act 1: Scene 1. (Elsinore Castle. The platform of the watch.)

- Enter Bernardo and Francisco, two sentinels (from opposite directions).

- Ber. Who’s there?

- Fran. Nay, answer me. Stand and unfold yourself.

- Ber. Long live the King!

- Fran. Bernardo?

- Ber. He.

- Fran. You come most carefully upon your hour.

- Ber. ‘Tis now struck twelve. Get thee to bed, Francisco. …

Constraints in Reasoning

Constraints limit the problem or the search space. An example constraint is relative frequency. In human language, the relative frequency of letters is measurable. This is true even though letters in English are symbols with no meaning. The relative frequency of words is also measurable, and words are meaningful. It is interesting to note that unless a random character generator has an explicitly non-random constraint to compensate for the relative frequency of characters in text (such as Middle English dramatic text), the likelihood of generating a correct text decreases proportionately to the disparity between frequently and infrequently occurring characters.

Is there a point at which we hit an impassable wall?

The decrease in likelihood may be enough to push a problem beyond the realm of possibility. A clear example is the fact that in the sample from Hamlet, the space character appears almost 50 times and “e” 33 times, while “K” and “k” appear only once each, and characters such as “x” and “z” do not appear at all, despite the random probability that every character in the set would appear roughly four times. In the interest, however, of exploring a more fundamental flaw in the application of random probability theory to extreme examples such as that above, we shall assume that the example string exhibits no characteristics that would automatically interfere with the probability of randomly generating the string. The subject of constraints is very important in modeling knowledge and reasoning processes on computers. Constraints will be revisited in the last three sections of Understanding Context.

The decrease in likelihood may be enough to push a problem beyond the realm of possibility. A clear example is the fact that in the sample from Hamlet, the space character appears almost 50 times and “e” 33 times, while “K” and “k” appear only once each, and characters such as “x” and “z” do not appear at all, despite the random probability that every character in the set would appear roughly four times. In the interest, however, of exploring a more fundamental flaw in the application of random probability theory to extreme examples such as that above, we shall assume that the example string exhibits no characteristics that would automatically interfere with the probability of randomly generating the string. The subject of constraints is very important in modeling knowledge and reasoning processes on computers. Constraints will be revisited in the last three sections of Understanding Context.

Quantifying Improbability

Let’s examine the probability of the dialog above being generated randomly. The problem space includes a character set of 26 Caps, 26 Smalls, 10 numbers, and 18 punctuation and formatting characters = 80. This space is mathematically no more outlandish than 30 or 6000 characters (the number of characters used in Chinese newspapers). The objective is to produce a string identical to that above. The likelihood of randomly generating the first letter is exactly 1 in 80, the second after the first, 1 over 802 or 1 in 6,400. The first line is 1/8054 or roughly 1 in 5.84 times 10 to the 102nd power, and the whole text above 1/80345 or roughly 1 in 1.79 times 10 to the 308th power.

Let’s examine the probability of the dialog above being generated randomly. The problem space includes a character set of 26 Caps, 26 Smalls, 10 numbers, and 18 punctuation and formatting characters = 80. This space is mathematically no more outlandish than 30 or 6000 characters (the number of characters used in Chinese newspapers). The objective is to produce a string identical to that above. The likelihood of randomly generating the first letter is exactly 1 in 80, the second after the first, 1 over 802 or 1 in 6,400. The first line is 1/8054 or roughly 1 in 5.84 times 10 to the 102nd power, and the whole text above 1/80345 or roughly 1 in 1.79 times 10 to the 308th power.

Act 1, Scene 1 comprises a string on the order of 10,000 consecutive characters. The resulting probability is so close to zero that abstract scientific notation is the only possible way of representing it. These probability calculations comprise two dimensional space. The dimensions are the length of the string and the probability of obtaining a match based on the size of the sample. Probability theory generally assumes that the likelihood of each consecutive character being randomly generated decreases exponentially. The definition of random and the algorithms used to generate “quasi-random” sequences are not material to this argument. As soon as we include other alphabets, such as Chinese, in our problem, the sample space becomes impossibly large.

Impossibly large sample spaces could bring our system to its knees so we need to temper our enthusiasm for random factors. Tomorrow, it’s possible I will discuss more about entropy and impossibility.

| Click below to look in each Understanding Context section |

|---|