21 Aug How Do You Think?

Weighing the Options

As part of my quest for the truly intelligent system, I have invested much effort in investigating and attempting to describe how people think. I am particularly concerned with how people integrate multiple ideas or constraints into their thinking and decision-making processes. More ideas help make better decisions: consider my posts on exformation and hidden agendas. However, the more constraints we add (subtext, for example), the more difficult it becomes to arrive at a judgement or decision. This is particularly true when some of the ideas or constraints compete, and competing objectives, paradoxes, and “Catch-22s” are all around us.

Remember Tevye, the papa in Fiddler on the Roof? He had to repeatedly weigh competing objectives: tradition on the one hand, and his love for his daughters and concern for their future happiness on the other. Each constraint we weigh in this manner may be very complex. I like to think of complex constraints as dimensions in a problem space. Computer systems will need the ability to process multidimensional problems before they can reliably deliver actionable knowledge. It is also my firm belief, based on years of study, that natural language understanding is a multidimensional problem with at least six complex dimensions.
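To make the idea of constraints as dimensions a little more concrete, here is a minimal sketch of weighing options across competing dimensions. It is only an illustration, not the model this blog develops: the weighted sum, the `Option` class, the `weigh` function, and all of the scores and weights are assumptions invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    scores: dict[str, float]  # hypothetical score per dimension, 0.0 to 1.0


def weigh(options, weights):
    """Rank options by a weighted sum over the dimensions, best first."""
    def total(option):
        # A dimension the option does not score counts as 0.0.
        return sum(w * option.scores.get(dim, 0.0) for dim, w in weights.items())
    return sorted(options, key=total, reverse=True)


# Illustrative numbers only: two competing dimensions from Tevye's dilemma.
options = [
    Option("forbid the match", {"tradition": 1.0, "daughter_happiness": 0.1}),
    Option("bless the match", {"tradition": 0.2, "daughter_happiness": 0.9}),
]

loving_papa = weigh(options, {"tradition": 0.4, "daughter_happiness": 0.6})
strict_papa = weigh(options, {"tradition": 0.8, "daughter_happiness": 0.2})
```

Changing the weights changes the winner, which is the point: the decision depends on how much each dimension counts, not only on the facts. A real constraint is far more complex than a single number, so treat this as a toy.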

| Understanding Context Cross-Reference | |
|---|---|
| Prior Post | Next Post |
| Two Rights and a Village | Specialized Instruments: Brain Areas |
| Definitions | More Definitions |
| neuron | information |
| cell | knowledge representation |
| exformation constraints | process ontology |
| knowledge | parallel computers |

Models of Thought

Other factors that make thinking and decision making more difficult are the absence and ambiguity of information that may be helpful or critical. We are continually required to make decisions based on incomplete or difficult-to-interpret information. Fortunately, we are often very good at doing this. None of us can see the future, yet we often make predictions based on past observations, current trends, and other information. Parents, politicians, and planners of all stripes continually predict the future and make decisions based on memory of the past, knowledge of the present, and extremely abstract ideas of what may or may not happen down the road.

Can understanding the software (psychology) of thinking help us design new computer software that can think? I think so, but computers are so structurally different from the brain that we’ll have to make major compromises in both the knowledge representation and the process. Compressing the body of human knowledge into ones and zeros, though underway, is not a trivial task. If we assume that we thinkers use multiple complex constraints, or dimensions, then for computers to handle them we need to flatten them out. Even with advanced parallel computers, each parallel thread can only handle two dimensions efficiently. Some of the innovations I will introduce in this blog describe a knowledge representation scheme designed to comprehend the body of human knowledge, and a processing model that can use it without churning forever.

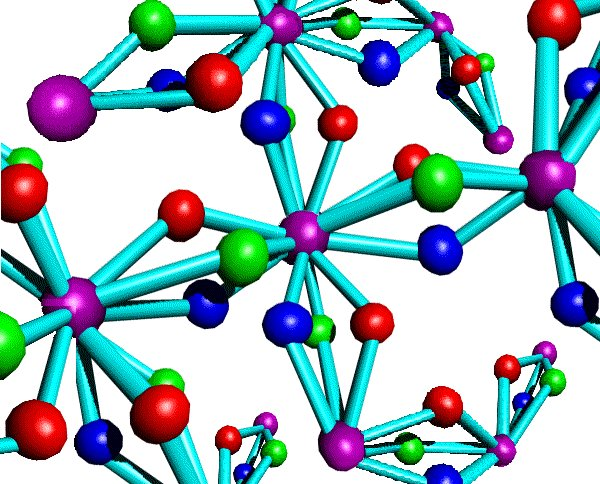

This diagram represents my model of information in an ontology that symbolizes associated facts in a domain space. I do not presume that this is an accurate model of how knowledge is stored in the brain, but rather a model that can efficiently store knowledge and support brain-like processes in automata. An important part of my intent in this blog is to describe the neurological, cognitive, linguistic and computing justifications for this model.

Steven Pinker describes a scientific debate that is at the center of the research I’ve undertaken. On one side of the debate were linguists, including Steven Pinker and Alan Prince, and on the other side, cognitive psychologists David Rumelhart and James McClelland. The microstructure theory proposed by the psychologists held that knowledge and process are built into a massively parallel network of associations. The linguists believed that language is processed in the brain by rules that manipulate symbols. The conclusion was a compromise in which “the mind has a hybrid architecture that carries out both kinds of computation” (Pinker 1999/2011, Postscript p. 9).
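This debate was argued largely over the English past tense, so that makes a convenient toy illustration of what a hybrid might look like. The sketch below is my own simplification, not Pinker's model: a plain dictionary (the hypothetical `IRREGULAR_PAST`) stands in for associative memory, which in the connectionist account would be a trained network rather than a lookup table, and `regular_past` is a deliberately crude symbolic rule that ignores consonant doubling and other spelling details.

```python
# Stand-in for associative memory: stored, item-specific forms.
# (Assumption: a real associative network would generalize by similarity.)
IRREGULAR_PAST = {
    "go": "went",
    "sing": "sang",
    "bring": "brought",
    "take": "took",
}


def regular_past(verb):
    """Symbolic rule: add the -ed suffix, with two simple spelling tweaks."""
    if verb.endswith("e"):
        return verb + "d"
    if len(verb) > 1 and verb.endswith("y") and verb[-2] not in "aeiou":
        return verb[:-1] + "ied"
    return verb + "ed"


def past_tense(verb):
    """Hybrid: try memory first, and fall back to the rule when memory fails."""
    stored = IRREGULAR_PAST.get(verb)
    return stored if stored is not None else regular_past(verb)
```

The rule path is what lets the system handle a verb it has never seen, such as the made-up "blick", while the memory path covers forms that no general rule predicts.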

I think the “hybrid” conclusion provides a very good basis for modeling, as long as the mix of rules and fuzzy neural-network processes provides good outcomes. My intent in the coming posts and sections is to explore the meaning of “hybrid” and to examine the structures and processes in the brain that may provide foundations for computational modeling. Much more to come.
