16 Jan Roots of Neural Nets

The concept of modern artificial neural systems (ANS) has its roots in the work of psychologists and philosophers as well as computer scientists. As mentioned in prior posts, Aristotelian theories of cognition and logic influenced the development of automata theory and associationism, which in turn gave rise to connectionism, also called parallel distributed processing (PDP) theory. Connectionism is the basis of much ANS theory, which incubated alongside computer science.

Anderson & Rosenfeld describe neural networks with simple processing elements (PEs or nodes): “Neural networks have lots of computing elements connected to lots of other elements. This set of connections is often arranged in a connection matrix. The overall behavior of the system is determined by the structure and strengths of the connections. It is possible to change the connection strengths by various learning algorithms. It is also possible to build in various kinds of dynamics into the responses of the computing elements” (1988, p. xvii).

| Understanding Context Cross-Reference | |
|---|---|
| Prior Post | Perceptrons and Weighted Schemes |
| References | Rumelhart 1986; Anderson 1988; Fukushima 1988; Rosenblatt 1958; von Neumann 1958; Code Project |

The PEs Anderson & Rosenfeld describe are simple because they only accumulate analog input and, based on the aggregate value, either fire or remain at rest. The output on each outgoing link is then either the input multiplied by the link weight or, in binary schemes, 1 times the weight for a fired PE and 0 for a resting PE.
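The behavior of such a simple PE can be sketched in a few lines of Python. This is only an illustration, not code from Anderson & Rosenfeld: the function name `pe_outputs`, the `threshold` parameter, and the `binary` flag are hypothetical, and the sketch assumes that in the graded case the accumulated input is passed along unchanged.

```python
def pe_outputs(inputs, out_weights, threshold=1.0, binary=True):
    """Sketch of one simple processing element (PE).

    inputs      -- analog values arriving on the incoming links
    out_weights -- one weight per outgoing link
    threshold   -- assumed firing threshold (not specified in the text)
    binary      -- True: a fired PE sends 1 * weight, a resting PE sends 0
                   False: the accumulated input is multiplied by each weight
    """
    total = sum(inputs)                 # accumulate the analog input
    if binary:
        activation = 1.0 if total >= threshold else 0.0
    else:
        activation = total              # assumption: graded output, no gating
    return [activation * w for w in out_weights]


if __name__ == "__main__":
    weights = [2.0, 0.5]
    print(pe_outputs([0.5, 0.25, 0.5], weights))                # fired PE
    print(pe_outputs([0.25, 0.25], weights))                    # resting PE
    print(pe_outputs([0.5, 0.25, 0.5], weights, binary=False))  # graded output
```

In the binary case the PE contributes either the full link weight or nothing, which is what makes it "simple" in the sense used here.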

A Brief History of Neural Nets (by no means a complete history):

| Year | Contributor/Topic | Year | Contributor/Topic |
|---|---|---|---|
| 1800s | Locke/Associationism | 1890 | Ramón y Cajal/Neuron |
| 1890 | William James | 1910 | Brodmann/Cytoarchitect |
| 1943 | McCulloch and Pitts | 1949 | Hebb/Behavior |
| 1958 | Von Neumann | 1958 | Rosenblatt |
| 1969 | Minsky and Papert | 1972 | Kohonen/Correlation |
| 1976 | Grossberg/Adaptation | 1981 | PDP Group |
| 1982 | Kohonen | 1982 | Hopfield |
| 1983 | Fukushima | … | |

Artificial Neural Systems

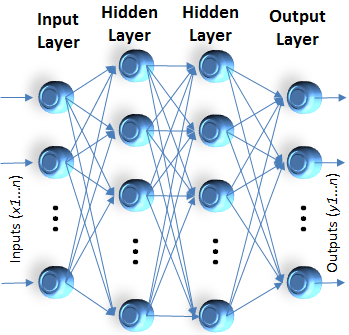

The figure shows an ANS with an input layer, an output layer, and two hidden layers. This section begins with an overview of neural network (ANS) theory and describes some interesting research and implementations. Because neural networks are intended to be brain simulators, they are an excellent point of reference for improving brain modeling theory. We review some of the fundamental assumptions governing ANS implementation and challenge some of them using information presented in earlier Understanding Context sections.
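As a rough illustration of the layered arrangement just described (input layer, two hidden layers, output layer), the following sketch passes activations forward one layer at a time. The function names, the step threshold of 0.5, and all weight values are hypothetical choices made for the example and are not taken from the figure.

```python
def step(x, threshold=0.5):
    """Fire (1.0) when the summed input reaches the assumed threshold."""
    return 1.0 if x >= threshold else 0.0


def layer_forward(activations, weights):
    """weights[j][i] is the strength of the link from unit i to unit j."""
    return [step(sum(w * a for w, a in zip(row, activations)))
            for row in weights]


def network_forward(inputs, layers):
    """Feed the inputs through each weight matrix in turn."""
    acts = list(inputs)
    for weights in layers:
        acts = layer_forward(acts, weights)
    return acts


# Hypothetical weights: 3 inputs -> 2 hidden -> 2 hidden -> 1 output
LAYERS = [
    [[0.5, 0.5, 0.0], [0.0, 0.5, 0.25]],   # input    -> hidden 1
    [[1.0, 0.0], [0.0, 1.0]],              # hidden 1 -> hidden 2
    [[0.5, 0.5]],                          # hidden 2 -> output
]

if __name__ == "__main__":
    print(network_forward([1.0, 0.0, 1.0], LAYERS))
    print(network_forward([0.0, 0.0, 0.0], LAYERS))
```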

Next, we look at symbolic and deterministic models for artificial intelligence applications, describing symbolic knowledge-representation schemes and the methods for applying heuristics to the knowledge to help solve problems. Fuzzy techniques for developing systems that exhibit robust performance even when they are asked to work outside the core of their domain knowledge are introduced, discussed and compared. We also identify application domains that may respond particularly well to use of each of the techniques presented.

Analyzing the ANS Paradigm

Generally, an artificial neural system (ANS) is a computer model of human cognitive processing. It is often implemented in software on uniprocessor computers in which memory registers are used to simulate distributed processing elements. Much of the work of the PDP group referred to throughout this study used networks implemented in software on uniprocessor platforms (Rumelhart & McClelland, et al., 1986).
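A minimal sketch of how a single processor can stand in for many distributed processing elements: the state of every PE is held in an ordinary list (playing the role of the memory registers), and the PEs are visited one at a time while a second buffer keeps the update effectively simultaneous. The names `synchronous_step` and `connections`, the threshold value, and the three-PE ring are assumptions made for illustration; this is not the PDP group's code.

```python
def synchronous_step(state, connections, threshold=1.0):
    """Advance every PE by one time step on a single processor.

    state[i]          -- current activation of PE i (0.0 or 1.0)
    connections[j][i] -- strength of the link from PE i to PE j
    """
    n = len(state)
    next_state = [0.0] * n            # separate buffer: all PEs read old state
    for j in range(n):                # PEs are processed sequentially
        total = sum(connections[j][i] * state[i] for i in range(n))
        next_state[j] = 1.0 if total >= threshold else 0.0
    return next_state


# Hypothetical connection matrix: a ring in which PE 0 drives PE 1,
# PE 1 drives PE 2, and PE 2 drives PE 0.
RING = [
    [0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
]

if __name__ == "__main__":
    state = [1.0, 0.0, 0.0]
    for _ in range(3):
        state = synchronous_step(state, RING)
        print(state)
```

Writing into `next_state` rather than updating `state` in place is the design choice that matters: it keeps a PE processed late in the loop from seeing values that were already updated in the same step, which is what a truly parallel machine would guarantee for free.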

Some ANS, however, run on single chips or on computers with many parallel processors. The “Connection Machine” by Thinking Machines Corporation is a massively parallel computer architecture that is uniquely suited to neural processing. The name of the company and the product reflect the intent of the designers. The architectural decisions that distinguish parallel architectures for simulation will be considered later.

In some ANS the simple processors are capable only of accumulating a set of weighted inputs and yielding a set of weighted outputs based on that input. Other ANS, however, augment the network with complex processors, or gnostic cells, to perform higher-level tasks than simpler models can handle (Fukushima, 1988, p. 67).

Implementations of ANS are often most concerned with these questions:

- Should the processing elements be simple, complex, or a combination of both?

- How many layers or levels should there be in the system?

- How should the layers be partitioned?

- What is the order of significance of different levels of abstraction?

ANS Foundations

In 1943 Warren McCulloch and Walter Pitts devised mathematical models of neuron functions; in 1949 D.O. Hebb introduced learning rules. In 1958, the same year as von Neumann’s book, a psychologist named Frank Rosenblatt published a paper in which he described the perceptron: simple processing elements in a feed-forward network. As part of his paper, he stated five assumptions about the brain that profoundly influence ANS research, even today. Behind all five assumptions is the notion that neurons are “simple” processing elements. These are Rosenblatt’s assumptions:

1. The arrangement of physical links in the nervous system differs from person to person. “At birth, the construction of the most important networks is largely random” (Rosenblatt, 1958, p. 388).

2. Over time, neurons, as the result of applied stimuli and the probability of causing responses in other neurons, can undergo some long-lasting changes.

3. The changes in (2) result from exposure to a large input sample; similar cells will develop pathways to some cells or cell groups, while dissimilar cells will form links with others.

4. Positive reinforcement can facilitate and negative reinforcement can hinder formation of links in progress. Thus, interaction with the environment is the catalyst for network development.

5. Similarity in this context is described as a “tendency of similar stimuli to activate the same set of cells” (ibid., p. 389).

The last three of these assumptions appear to be wholly consistent with what is currently known and described in Understanding Context. The first two, however, will be subjects of further scrutiny as we move forward in this Section, and in the Modeling Section, with our examination of computer modeling of brain functions.
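To make the idea of reinforcement strengthening or weakening links concrete, here is a minimal sketch of the textbook perceptron error-correction rule. It is offered as an assumption-laden illustration, not as the formulation in Rosenblatt's 1958 paper: the function names, the learning rate, the epoch count, and the logical-AND training set are all hypothetical choices.

```python
def predict(weights, bias, x):
    """Fire (1) when the weighted sum plus bias is positive, else rest (0)."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Adjust link weights after each example.

    A missed firing (target 1, output 0) strengthens the active links;
    a false firing (target 0, output 1) weakens them.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)   # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical training set: logical AND of two binary inputs
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_SAMPLES)
    for x, target in AND_SAMPLES:
        print(x, predict(w, b, x), target)
```

Because AND is linearly separable, this rule settles on weights that classify all four examples correctly; a function such as XOR would not converge with a single layer of this kind.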
