24 Feb Neural Network Perception

Feedback in Image Processing

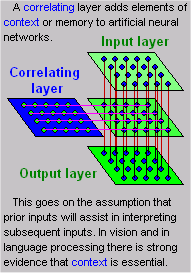

While the flow of electrical impulses triggered by visual stimuli travels sequentially through layers I, then II, then III of the visual cortex, this directional flow does not prevent impulses from other sources, including feedback loops within the visual cortex, from contributing to our ability to process images. Kunihiko Fukushima, of the NHK Science and Technology Laboratories in Tokyo, proposed a neural network model that imitates the ability of the visual cortex to selectively process the important or meaningful input signals and filter out visual “noise” (Fukushima, 1988).

This model is more complex than many neural networks that preceded his proposal. In his model, each processing layer of the network is paired with a feedback layer. The net impact of this innovation is to add context to the process.
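
To make the pairing of processing and feedback layers concrete, here is a minimal sketch in Python. It is not Fukushima's actual model, which is far more elaborate; it only illustrates the general idea of a feedback layer sending a gain signal back toward the input so that the strongest interpretation is reinforced and the rest is treated as noise. The function names, the `floor` parameter, and the toy weights are all assumptions made for illustration.

```python
def forward(weights, signal):
    """Processing layer: one rectified activation per feature detector."""
    return [max(0.0, sum(w * s for w, s in zip(row, signal))) for row in weights]


def feedback(weights, activations):
    """Feedback layer: project the strongest detector back onto the input
    as a gain mask between 0 and 1 (hypothetical rule, for illustration)."""
    winner = max(range(len(activations)), key=activations.__getitem__)
    peak = max(max(weights[winner]), 1e-9)
    return [max(0.0, w) / peak for w in weights[winner]]


def settle(weights, signal, steps=3, floor=0.2):
    """Alternate forward and feedback passes so context reshapes the input."""
    gains = [1.0] * len(signal)
    activations = []
    for _ in range(steps):
        gated = [s * g for s, g in zip(signal, gains)]
        activations = forward(weights, gated)
        mask = feedback(weights, activations)
        # Inputs the winner does not use are attenuated down to `floor`.
        gains = [floor + (1.0 - floor) * m for m in mask]
    return activations, gains


# Two toy detectors: the first watches inputs 0-1, the second inputs 2-3.
toy_weights = [[1.0, 1.0, 0.0, 0.0],
               [0.0, 0.0, 1.0, 1.0]]
# A clear pattern on inputs 0-1 plus some "noise" on input 2.
noisy_signal = [1.0, 0.9, 0.4, 0.0]

activations, gains = settle(toy_weights, noisy_signal)
```

After a few passes the detector that matches the clear pattern stays strongly active, while the response to the noisy input is damped because the feedback mask lowered its gain.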

| Understanding Context Cross-Reference | |
|---|---|
| Prior Post | Next Post |
| Understanding What we See | Discrimination Association and Recognition |
| Definitions | References |
| neural network modeling | Fukushima 1988 |
| context interpretation | Bibliography refs |
| Short-term memory expectations | McClelland 1981 |

With these additional layers and multi-directional activation flow, Fukushima’s artificial neural networks are better able to interpret visual input patterns than simpler ANS models. This model serves as a basis for building robot vision, including autonomous land vehicles, and also corroborates findings in human vision. In the brain, the feedback from the hippocampus and the correlating areas in the midbrain could serve the roles proposed in Fukushima’s feedback layers. Short-term memory, where immediate context information is stored, can also provide feedback as expectations that can simplify and speed up interpretation.

Audio Networks

Black-and-white vision is a two-dimensional process, as pointed out in the earlier discussion of perception. Hearing is primarily two-dimensional as well: its dimensions are frequency (tone) and duration. Just as color adds cues to vision, timbre adds cues to audio signals. The same types of neural networks that are successfully employed in image processing can be used in audio signal processing with very few intrinsic changes.
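
One way to see why image-processing networks carry over to audio is to lay a sound out as a grid of time by frequency, much like the pixels of a black-and-white image. The sketch below builds such a grid with a plain discrete Fourier transform over fixed-size frames. The function names, the frame size, and the synthetic tones are assumptions chosen for illustration, not part of any particular speech system.

```python
import cmath
import math


def spectrogram(samples, frame_size):
    """Return a time-by-frequency grid: one row of DFT magnitudes per frame."""
    grid = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        row = []
        for k in range(frame_size // 2 + 1):
            total = sum(x * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n, x in enumerate(frame))
            row.append(abs(total))
        grid.append(row)
    return grid


def tone(cycles_per_frame, frame_size, frames):
    """Synthetic sine tone lasting a whole number of frames."""
    return [math.sin(2 * math.pi * cycles_per_frame * n / frame_size)
            for n in range(frame_size * frames)]


# A low tone for two frames followed by a higher tone for two frames.
signal = tone(2, 16, 2) + tone(5, 16, 2)
grid = spectrogram(signal, 16)

# For each time step, the frequency bin carrying the most energy.
peaks = [max(range(len(row)), key=row.__getitem__) for row in grid]
```

Each row of `grid` is a moment in time and each column a frequency band, so the change from the low tone to the high tone shows up as a shift in where the energy sits, the audio counterpart of an edge in an image.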

Wernicke’s area (Brodmann area 22) in the brain is where audio signals, including speech, are interpreted. The actual patterns of vibrations that constitute sound, including speech, have several additional characteristics beyond frequency and duration. Just as the visual cortex has different cell types that specialize in sorting out or responding to different characteristics in patterns of images (orientation, color, movement…), different types of cells in the audio receptive areas respond to volume, tone, timbre, and amplitude.

Given the fact that we can receive and sort out the characteristics of sounds, what must we do to successfully interpret the sounds? How can we recognize the call of a certain bird or the voice of a friend? Once again, the whole brain participates in the process. We rely on our memory to match incoming patterns with those we have heard before. Once we hear a new sound pattern enough times to sink in, we can remember it (or learn it) and recognize that pattern in the future. This discussion on learning will be continued in subsequent posts. But the intent of bringing this up in this context is that neural networks for speech recognition and other audio tasks have been quite successful at performing “brain tasks”.
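
The idea of matching incoming patterns against remembered ones, and of a sound needing to be heard enough times before it "sinks in", can be sketched as a small template memory. This is a toy illustration rather than how the brain or any real speech recognizer works; the class name `SoundMemory`, the exposure count, the similarity threshold, and the feature vectors are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SoundMemory:
    def __init__(self, min_exposures=3, threshold=0.9):
        self.min_exposures = min_exposures  # hearings needed before recall
        self.threshold = threshold          # minimum similarity for a match
        self.sums = {}                      # label -> summed feature vector
        self.counts = {}                    # label -> number of hearings

    def hear(self, label, pattern):
        """Accumulate one more exposure to a labeled sound pattern."""
        total = self.sums.setdefault(label, [0.0] * len(pattern))
        for i, value in enumerate(pattern):
            total[i] += value
        self.counts[label] = self.counts.get(label, 0) + 1

    def recognize(self, pattern):
        """Return the best-matching learned label, or None if nothing fits."""
        best_label, best_score = None, self.threshold
        for label, total in self.sums.items():
            if self.counts[label] < self.min_exposures:
                continue  # heard too few times to have been learned
            # Cosine similarity ignores scale, so the sum acts like a mean.
            score = cosine(pattern, total)
            if score >= best_score:
                best_label, best_score = label, score
        return best_label


memory = SoundMemory()
for call in ([0.9, 0.1, 0.0, 0.2], [0.8, 0.2, 0.1, 0.2], [1.0, 0.1, 0.0, 0.3]):
    memory.hear("robin", call)
memory.hear("friend", [0.1, 0.9, 0.7, 0.0])  # heard only once so far

robin_guess = memory.recognize([0.85, 0.15, 0.05, 0.2])
friend_guess = memory.recognize([0.1, 0.9, 0.7, 0.0])
```

Here the bird call is recognized because it has been heard three times, while the friend's voice, heard only once, is not yet recalled even though the incoming pattern matches it exactly.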

Tactile Systems

Many robots need to imitate human tactile sensitivity to be able to perform specialized tasks such as carefully grasping and moving fragile objects for assembly. Again, we see a scale of effort required to perform like tasks: a firm grasp is required to lift a rock while a gentle grasp is required to lift an egg. A light or “floating” twist is best to start a bolt in the threads of a nut while a firm twist is best to finish the job. Pressure sensors can be used in robots to identify and gauge requirements on pre-established scales so that, provided the programmer knows the properties of all objects within reach, the robot will be prepared for everything it needs to do.
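
The pre-established scale approach can be sketched as a lookup table of acceptable grip pressures plus a simple correction step driven by the pressure sensor. The object names, force ranges, and step size below are invented for illustration and are not measurements from any real gripper.

```python
# Hypothetical acceptable grip-force ranges in newtons, set by the programmer.
GRIP_SCALE_NEWTONS = {
    "egg": (0.5, 2.0),
    "rock": (10.0, 40.0),
}


def adjust_grip(obj, sensed_force, step=0.5):
    """Nudge the grip toward the object's pre-established force range.

    Raises KeyError for any object the programmer did not anticipate.
    """
    low, high = GRIP_SCALE_NEWTONS[obj]
    if sensed_force < low:
        return min(sensed_force + step, high)   # too loose: tighten
    if sensed_force > high:
        return max(sensed_force - step, low)    # too tight: ease off
    return sensed_force                         # within range: hold


tighten_on_egg = adjust_grip("egg", 0.0)
ease_off_egg = adjust_grip("egg", 3.0)
hold_rock = adjust_grip("rock", 20.0)
```

The table works only as long as every object within reach has an entry. Asking for anything else, such as `adjust_grip("paper plate", 1.0)`, fails with a `KeyError`, which is the limitation of relying on the programmer to know every object in advance.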

But what about MIPUS? He has to lift plastic and china plates, full and empty glasses, napkins, pitchers, silverware, knives, and a host of other objects. What does MIPUS need to know to successfully lift a paper plate with a hot dog, potato salad, and baked beans on it? Baked beans in a light sauce can soak into paper plates, degrading their structural integrity. The tricks we use to carry them involve both manual dexterity and ingenuity. We get the feedback from our sense of touch, but responding to potential food catastrophes can require complex thinking and action.

Neural network perception systems have proven quite successful at perceptual tasks and recognition. In fact, almost any two-dimensional task can be learned by a neural network. Start adding dimensions, though, and the model degrades very quickly. This is a time to have many tools in your tool belt that you can use to solve different types of problems.
