22 Feb Understanding What We See

Many Japanese kanji characters (originally imported from China) are little pictures of things in the real world. For example, 木 (ki) is a tree: you can see the trunk and the branches. Add a line at the bottom and you get 本 (hon), roots or origin. Put three trees together, 森 (mori), and you have a forest. The symbol for heart, 心 (kokoro), looks kinda like a heart, and if you look hard enough, you can see fire in 火 (ka or hi), a river in 川 (kawa), and a mountain in 山 (yama). Whether the symbols used to represent language are imitative, like these Japanese shōkei moji characters, or completely abstract, like alphabets, the human brain can do something with the pattern: recognize it, then use it to contribute to understanding.

There are specific brain areas, Broca’s area and Wernicke’s area, where language is processed. The signals from visually perceiving these characters go to the language processing areas of the brain after the visual cortex has had a chance to find the features and reassemble them into a recognized whole. The whole, and the parts, represent patterns that are recognizable because we have encountered them before and remembered or learned them. Things we see that are not written or spoken language, once reassembled in the visual cortex, may go elsewhere for pattern recognition and further processing. The first time we see a thing, we may not recognize it at all, or we may associate it with a similar thing and generalize.


Patterns of Links


There are important connections between the links in the brain and the patterns we perceive. The link structure of neurons in the brain, taken as a whole, appears to be chaotic and relatively random. If we look more closely, however, we see patterns emerging in the brain: functionally specialized areas and nuclei. Since the architecture of the brain can be reduced (to some extent) to patterns of links, it is not difficult to see how the things we perceive, which reach us as patterns with some fixed dimensionality, could create some sort of localized activation patterns in the brain.

These localized patterns of activation, however, operate within the larger framework of a massively interconnected network of components with diverse functions. Each of the localities in the brain is simultaneously doing something; thus, the aggregate activity in the brain is somewhat different for each perceptual stimulus. This is so even when the input stimuli of two separate events are identical, possibly because of the passage of time and intervening experiences. The aggregate activity of the brain supports metabolism as well as cognition. Digestion is managed by the brain, as are pattern recognition and abstract thinking. This diversity makes the brain a robust mechanism able to respond uniquely to the same or similar input over multiple instances in slightly different contexts.

Aggregate Activity of the Brain


Remember how some of the first important things Yorrick learned were about comfort? This, and the instinctive bodily need for survival, are important nuggets of early learning and form a core of knowledge. The brain continually supports our respiratory and digestive systems, keeping the body alive. This metabolic activity is typically not considered part of our consciousness because it is involuntary. These “survival” activities may seem only loosely connected with cognition, but our emotional state is tied to our physical well-being. Furthermore, our emotions profoundly contribute to our ability to interpret, decide, and perform other cognitive functions. Yorrick intuitively understands what makes him happy and what does not.

Stereo Vision

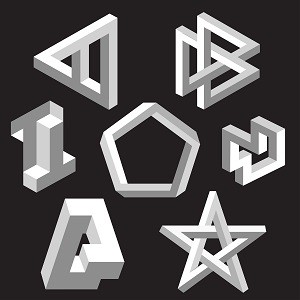

Vision is a relatively two-dimensional process. We are nonetheless able to perceive multiple characteristics in images, including depth, color and motion. The information we are able to draw from incoming visual data exceeds the sum of the two-dimensional impression on our retinas because the strength of our cognitive apparatus makes up for some of the limitations in our visual apparatus. Though you are looking at a two-dimensional screen on a computer, you can infer that this image is intended to portray depth. This ability to infer is critical to cognition.

At this very moment, you are in the process of inverting the image you are seeing so you can interpret it. This process activates the feedback centers in the core of the brain through signals relayed by the lateral geniculate nucleus. This relay process facilitates interpretation by triggering other parts of the brain.

Understanding What We See

Feature-selective cells in the visual cortex respond to parts of the image imposed on the retina. The signals from the feature-selective cells form a pattern of activation in the brain. The pattern is different for different images and the same for like images. It is not really the image we respond to directly; rather, it is the pattern of activation in the cortex that represents the image that triggers recognition.
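The idea that recognition is triggered by a pattern of activation, rather than by the image itself, can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the two hand-built 3x3 detectors, the helper names (`activation_pattern`, `similarity`, `bar`), and the tiny 7x7 binary images. It is not a model of real cortical cells; it only shows how like images can yield the same pattern while different images yield different ones.

```python
from math import sqrt

# Hypothetical "feature-selective cells": each 3x3 detector responds to
# a line of one orientation and is indifferent to the other.
KERNELS = {
    "vertical": [[-1, 2, -1], [-1, 2, -1], [-1, 2, -1]],
    "horizontal": [[-1, -1, -1], [2, 2, 2], [-1, -1, -1]],
}


def activation_pattern(image):
    """Return one summed response per detector, in KERNELS order."""
    rows, cols = len(image), len(image[0])
    pattern = []
    for kernel in KERNELS.values():
        total = 0
        for r in range(rows - 2):
            for c in range(cols - 2):
                response = sum(
                    kernel[i][j] * image[r + i][c + j]
                    for i in range(3)
                    for j in range(3)
                )
                total += max(response, 0)  # a cell fires or stays silent
        pattern.append(total)
    return pattern


def similarity(a, b):
    """Cosine similarity between two activation patterns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def bar(orientation, position, size=7):
    """Build a size x size binary image containing a single full-length bar."""
    image = [[0] * size for _ in range(size)]
    for k in range(size):
        if orientation == "vertical":
            image[k][position] = 1
        else:
            image[position][k] = 1
    return image


left_bar = activation_pattern(bar("vertical", 2))
right_bar = activation_pattern(bar("vertical", 4))
flat_bar = activation_pattern(bar("horizontal", 3))

print(similarity(left_bar, right_bar))  # like images: same pattern
print(similarity(left_bar, flat_bar))   # different images: different pattern
```

In this sketch the two vertical bars sit at different places on the "retina", yet they produce the same activation pattern, while the horizontal bar produces a different one. Summing responses over the whole image is a deliberate simplification that throws away position.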

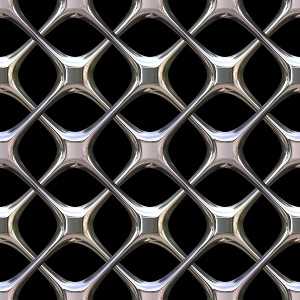

In posts in Section 3 we learned about different principles of feature selectivity and neuron complexity. This selection process is the beginning of sorting out input and activating associated areas in the brain. As we sort out the input from the noise, we must then make inferences about depth and motion. Some images are easier to recognize at certain times than at others. Even if you can recognize the image at right at first glance, you can lose it by staring at it too long.

Depth and Motion

Though animals and humans seem to do it instinctively, interpreting depth and motion is not a trivial problem. This has become painfully apparent as psychologists and computer scientists have attempted to design computers that can interpret depth and motion. The amount of depth and motion logic in autonomous land vehicles, for example, is enormous. Successes have been few, slow in coming, and very expensive.

Many organisms have a great combination of muscle systems in the eyes and neck that lets them track moving objects. Clues from insects and other organisms with segmented visual systems have contributed to work on the motion problem for neural networks, which are not as flexible as living organisms that can move around quickly (sorry MIPUS).

The image characteristics we derive from a set of stimuli may be far more than the sum of the content of the stimuli would suggest. Before an image passes from the eye into the brain, it is converted into a flat or two-dimensional pattern in the retina. The retina responds to light and sends signals back through the relay centers to the visual cortex. In order to determine what is closer and what is more distant in our field of vision, we rely on light levels, shadow, size of objects, context, and our ability to infer or take a closer look when we encounter visual ambiguity (which is probably more ubiquitous than we notice).
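One of these cues, the size of objects, can be made concrete with a small worked example. The sketch below assumes a simple pinhole-camera model of the eye, which is a simplification not taken from this post, and a rough focal length of 17 mm; the function name `estimate_distance` and its parameters are hypothetical. The point is that a flat image size only becomes a distance once memory supplies the object's real size.

```python
def estimate_distance(known_height_m, image_height_mm, focal_length_mm=17.0):
    """Estimate distance (metres) from remembered size and retinal image size.

    Assumes a pinhole model: image_height = focal_length * height / distance.
    The 17 mm default is a rough, assumed value for the human eye.
    """
    if image_height_mm <= 0:
        raise ValueError("image height must be positive")
    return known_height_m * focal_length_mm / image_height_mm


# A person we remember as about 1.8 m tall casts a 1.7 mm image on the retina.
near = estimate_distance(1.8, 1.7)
# The same person casting an image half that size must be farther away.
far = estimate_distance(1.8, 0.85)

print(round(near, 1), round(far, 1))
```

Without the remembered height the calculation cannot be done at all, which is one way of seeing why the content of the brain matters as much as the signal from the eye.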


In other words, we use our whole brain, including its content (our memory of how things are and our expectations), to understand the things we see.
