06 Aug Data Convergence at Velocity

Joe Roushar – August 2015

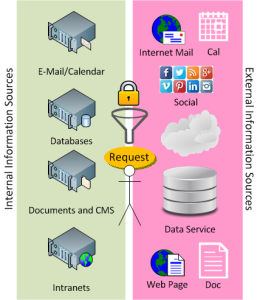

Knowledge workers in all types of organizations need information from internal databases, intranet sites and documents, as well as from external databases, web pages and documents. The promise of data convergence includes the lofty goal of providing, from a single request, structured and unstructured information from both internal and external sources that can answer the complex questions a worker needs answered to accomplish complex tasks, make good decisions, and operate, or compete, more effectively. Naturally, the completeness and clarity of a search or other request will determine the number of results returned and the likelihood of the needed information appearing near the top of the results. Today I want to talk about converged data searching and requesting in a world where the rising information blizzard is intense and chaotic.


Database search is often very limited, based on queries that can do little beyond matching or approximating requested strings in a narrowly specified set of columns. Statistical approaches to unstructured search are good for helping find what you need based on the words you type into a search engine or feature, but wouldn’t it be better if the computer understood what you need rather than guessing (string matching)? For this you need a dialog in which smarter computers help you articulate your needs, then understand the meaning BEFORE trying to find the answer in a database, web site or document. When the answer is not in a list or database, but buried in a document or web page, understanding meaning is, again, the key to finding the right information. We need systems and search tools that understand meaning in context, rather than being limited to hard-coded metadata and superficial skimming.
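To make the gap between string matching and meaning concrete, here is a toy sketch in Python. The concept map and sample documents are hypothetical, and looking up related terms is only a crude stand-in for understanding meaning in context, but it shows why a literal match misses content that a concept-aware match finds.

```python
import re

# Hypothetical concept map: each concept groups terms that share a meaning.
CONCEPTS = {
    "physician": {"physician", "doctor", "md"},
    "invoice": {"invoice", "bill", "statement"},
}

# Hypothetical unstructured content.
DOCUMENTS = [
    "The doctor reviewed the chart.",
    "Send the bill to accounting.",
    "The physician signed the order.",
]


def string_match(term, docs):
    """Literal matching: return documents that contain the typed string."""
    term = term.lower()
    return [d for d in docs if term in d.lower()]


def expand(term):
    """Return every term that shares a concept with the requested term."""
    term = term.lower()
    for terms in CONCEPTS.values():
        if term in terms:
            return terms
    return {term}


def tokens(text):
    """Split text into lowercase word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def concept_match(term, docs):
    """Return documents that mention any term sharing the requested concept."""
    wanted = expand(term)
    return [d for d in docs if wanted & tokens(d)]


literal = string_match("physician", DOCUMENTS)
by_concept = concept_match("physician", DOCUMENTS)
```

Here `literal` holds only the document that contains the word "physician", while `by_concept` also picks up the document that says "doctor".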

| Understanding Context Cross-Reference | |
|---|---|
| Click on these Links to other posts and glossary/bibliography references | |
| Prior Post | Next Post |
| Thinking in Parallel | High 5s of Intelligent Information Modeling |
| Definitions | References |
| convergence, searching, SEO | Czarnetcki 2015, Inmon 2015 |
| Big Data, subject matter expert, open web | McCreary 2014 |
| database, UML, deep web | Davies 2009 |

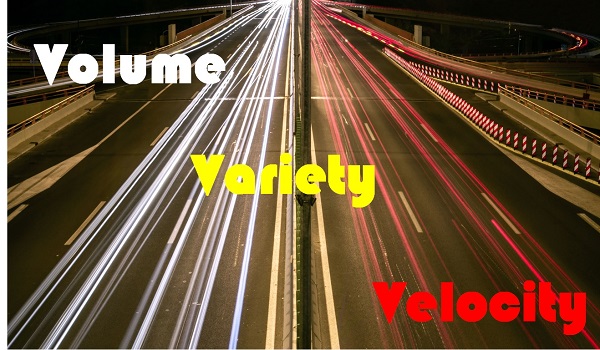

Big Data means different things to different people. For me, there are a few things that differentiate Big Data from older database standards: Big Data can deliver very high performance with very large datasets, often including structured and searchable or queryable unstructured content. We’re not talking about blobs in a relational database that you can only request as part of a query on atomic data in the same rows. Metadata makes it possible to get narrative, video, image, sound or other rich content as part of a request that also delivers structured data results. You can tell it’s not big data if you have to formulate complex joins with primary and foreign keys connecting records in tables.

Big data platforms are also characterized by distributed storage models, and they often use a BASE transaction model that does not ensure immediate consistency as ACID transactions do. BASE stands for Basically Available, Soft state, Eventual consistency, and it favors robust availability and error recovery, even in massively distributed storage architectures. We won’t compare BASE and ACID strategies today, but it is an important differentiator.
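As a rough illustration of what "eventual consistency" means in practice, here is a minimal Python sketch. It assumes two in-memory replicas and a simple last-write-wins rule keyed on a version number. The `Replica` class and its methods are hypothetical and do not reflect how any particular big data platform replicates data.

```python
class Replica:
    """A hypothetical storage node that accepts writes locally and syncs later."""

    def __init__(self, name):
        self.name = name
        self.store = {}  # key -> (version, value)

    def write(self, key, value, version):
        # Last-write-wins: keep the value with the highest version number.
        current = self.store.get(key)
        if current is None or version > current[0]:
            self.store[key] = (version, value)

    def read(self, key):
        entry = self.store.get(key)
        return entry[1] if entry else None

    def sync_from(self, other):
        # Pull the other replica's entries; stale versions are ignored by write().
        for key, (version, value) in other.store.items():
            self.write(key, value, version)


a = Replica("a")
b = Replica("b")

a.write("price", 10, version=1)
b.sync_from(a)                   # both replicas now hold version 1

b.write("price", 12, version=2)  # the update lands on replica b only
stale = a.read("price")          # replica a still answers with the old value

a.sync_from(b)                   # replication catches up
fresh = a.read("price")          # replica a now agrees with replica b
```

Between the write on `b` and the later sync, both replicas stay available but can disagree. That window is the price paid for availability, and it closes once the replicas exchange state.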

From a consumer perspective, searching for needed information on the open web has become much easier and more efficient with the rise of advanced search engines such as Google, Yahoo and Bing (and others such as AltaVista). For businesses that need information from internal data sources, such as databases and content management systems, as well as from partners, the deep dark web and the open web, that ease and efficiency have not yet arrived. Yet businesses arguably need to find key information as a matter of competitiveness or survival. Today I’m going to talk about what organizations can do to make finding the information they need much easier.

I recently delivered a webinar for the International Association of Systems Architects (IASA) on two key components of a great strategy for making non-public information more accessible to the organizations that use it. The solution combines semantics and big data using a meta-model. Model-based system engineering began decades ago.

My advisor at the University of Minnesota, where I did my master’s program in computer and information science, was the amazing Dr. James Slagle, erstwhile inventor of the first expert system as well as blind chess champion of the world. Expert systems and decision support systems were some of the first software designs based on models.

Truth be told, all programs are based on models. The real questions are:


- “Where is the model?”

- “How easy is it to adapt?” and

- “What skills do you need to adapt it?”

Data models, the relational data definitions that organize the underlying data, are the most obvious part of the model, and these are typically fixed and brittle. Packaged software applications have long come with inappropriately named “User Defined Fields”. The name is deceptive because, among the people who purchase and use the software, it is often only developers who can effectively adapt these fields to real business purposes. And too often, there are not enough of them to meet the unique needs of each customer. The rise of the citizen developer, Platform-as-a-Service and iBPM platforms is whittling away at this limitation, but true citizen developer empowerment is slow in coming.

The classic disconnect between programmers and business users is that they have completely different sets of knowledge with little overlap. So the real model is in the head of the subject matter expert, the elicited model is in the head of the analyst and in a set of narrative requirements, and the second, translated model is in the head of the software designer and in a set of UML or other diagrams. Formal semantic meta-models can be intrinsic parts of programs (as in topologies like Be-Informed), or they can be independent objects in information space available to any authorized subscriber as a gateway into systems whose schemata are published in the meta-model. I’ll delve into this approach in future posts.

In a recent post on why semantics are important to businesses I talked about mobility, uptime, secure access, converged data, semantic metadata and, of course, the most important value driver: empowering knowledge workers. I began this post talking about getting both structured and unstructured results (converged data) from a single request. This capability is still out of reach of most businesses, but not all.
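To picture what a single converged request might look like, here is a minimal Python sketch. Everything in it is assumed for illustration: the `CUSTOMERS` rows stand in for a structured source, the `Document` objects stand in for unstructured content, and the `tags` field plays the role of semantic metadata that ties the two together.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical unstructured item carrying semantic metadata tags."""
    title: str
    text: str
    tags: set = field(default_factory=set)


# Hypothetical structured source (rows in a customer table).
CUSTOMERS = [
    {"id": 1, "name": "Acme Corp", "region": "Midwest"},
    {"id": 2, "name": "Globex", "region": "West"},
]

# Hypothetical unstructured source (notes and memos).
DOCUMENTS = [
    Document("Acme renewal notes", "Call notes on the renewal.", {"acme corp", "contract"}),
    Document("Safety memo", "Quarterly safety reminders.", {"safety"}),
]


def converged_request(subject):
    """Answer one request from both the structured and unstructured sources."""
    key = subject.lower()
    rows = [r for r in CUSTOMERS if r["name"].lower() == key]
    docs = [d for d in DOCUMENTS if key in d.tags]
    return {"structured": rows, "unstructured": docs}


result = converged_request("Acme Corp")
```

The request for "Acme Corp" returns the matching customer row alongside the renewal notes, because the metadata on the document names the same subject as the row. Without that metadata, the document side of the request has nothing to match on.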

Data Convergence at Google

“Google extended the map and reduce functions to reliably execute on billions of web pages on hundreds or thousands of low-cost commodity CPUs” (McCreary 2014). This lowered costs enough to process much more data than had previously been cost-effective. Other search engines and organizations leveraged similar approaches to handle their information overloads. “It fostered a growing awareness of the limitations of traditional procedural programming and encouraged others to use functional programming systems” (McCreary 2014). I’m seeing the same phenomenon in multiple companies in the US heartland. More and more organizations are turning to big data to solve complex problems. Today, in an architecture review meeting, one business division brought in a proposal to add yet another big data system with specific capabilities to meet their unique business needs for upstream data capture, even though the organization has already implemented two other big data platforms.
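For readers who have not seen the map and reduce pattern, here is a minimal single-process sketch in Python that counts words across a few pages. It is not Google's implementation. The function names are my own, and a real system would spread the map and reduce steps across many machines rather than run them in one loop.

```python
from collections import defaultdict


def map_phase(page):
    """Map: emit a (word, 1) pair for every word on a page."""
    for word in page.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group the emitted values by key so each key can be reduced independently."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse all the values for one key into a single total."""
    return key, sum(values)


def word_count(pages):
    pairs = (pair for page in pages for pair in map_phase(page))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = word_count(["big data big models", "data convergence"])
```

Because each page is mapped independently and each key is reduced independently, the work can be split across commodity machines, which is what makes the approach economical at very large scale.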

Much of the activity in big data is in downstream reporting and analytics capabilities. Forrester Research recently published a summary of successful big data implementations in various patterns (EDW, Data Refinery, All-in-one, Hub-and-spoke) across multiple industry verticals. They looked at several platforms including:

- Actian Vectorwise

- Composite Software Server

- Cloudera Hadoop Distribution

- Datameer

- Hortonworks Data Platform

- IBM InfoSphere BigInsights

- LexisNexis HPCC Systems

- MapR M5

- Pentaho

- Teradata Aster

It is a telling snapshot of what is happening with massive data sets, but convergence is absent. Among their key takeaways are:

- Big data provides cost-effective and agile capabilities for massive data sets

- Loading the big data store prior to “transformation” (ELT instead of ETL) increases efficiency

- None of their case studies used social or pure unstructured external content, falling short of the promise

The second finding is predicted by the evolution away from code-centered capabilities to data-centered capabilities. The smarter our code is, the less we have to torture the data to make it not “garbage” as dictated by brittle, legacy code. The next important advancement in the evolution of data strategy will be when you only transform the data when integrations with other systems require it, and never for a reporting or dimensional data store. Waiting till the last moment to make the data conform to a specific need implies that your analytics, reporting and presentation capabilities include semantic data access as noted in my recent post on Implementing Semantics.
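The idea of loading first and transforming only when a specific need arises can be sketched in a few lines of Python. This is a toy example under stated assumptions: `raw_store` is a hypothetical landing zone, the records are made up, and `revenue_view` stands in for whatever reporting or analytics capability shapes the data at read time.

```python
import json

raw_store = []  # hypothetical landing zone: records are kept exactly as received


def load(record_text):
    """Load step: store the record untouched, with no cleansing or reshaping."""
    raw_store.append(record_text)


def revenue_view():
    """Transform on read: shape raw records only for the report that needs them."""
    rows = []
    for text in raw_store:
        record = json.loads(text)
        amount = record.get("amount")
        if amount is None:
            continue  # this view skips records with no amount; they stay in the store
        rows.append({
            "customer": record.get("customer", "unknown").strip().title(),
            "amount": float(amount),
        })
    return rows


load('{"customer": " acme corp ", "amount": "120.50"}')
load('{"customer": "Globex", "note": "no amount yet"}')
load('{"amount": 30}')

view = revenue_view()
```

Nothing is rejected or rewritten at load time, so a record that one view ignores remains available to another view with different needs. The cost is that the code reading the data has to be smart enough to cope with its untidy shape.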


Why are we seeing the significant convergence capabilities of the selected big data platforms left out of implementations? I suspect the challenges arise from the lack of semantically centered models and absence of efficient semantic metadata creation, curation and management strategies. I will discuss good strategies for care and feeding of metadata in an upcoming post.
