19 Feb Dichotomy vs. Continuum

Consciousness, it appears from our recent discussion, is not an “on or off” proposition. We have subconsciousness, waking consciousness, consciousness during sleep, and so on. Before that we spoke of the yin and yang of perception and learning. Since the subject of this series is cybernetics, we shall diverge from our discussion of consciousness long enough to make some observations directly pertinent to modeling cognition.

In most computer systems, binary operations on binary data simplify processing. Chip designers can pack central processing units (CPUs) and memory chips with huge numbers of tiny transistors, each able to hold one of two states, representing a one or a zero. The information they process, however, may include an infinite number of combinations of values, few of which are perfect dichotomies. To manage the mismatch, we flatten those many values into logical symbols (sentences, words, letters and numbers), and the computer then translates the symbols into streams of ones and zeros.
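To make the translation from symbols to ones and zeros concrete, here is a minimal sketch in Python. It assumes UTF-8 as the encoding, which is only one of many possible schemes, and the helper names `to_bits` and `from_bits` are hypothetical, chosen just for this illustration.

```python
def to_bits(text: str) -> str:
    """Encode text as UTF-8 and render each byte as eight binary digits."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Reverse to_bits: parse space-separated 8-bit groups back into text."""
    return bytes(int(chunk, 2) for chunk in bits.split()).decode("utf-8")


if __name__ == "__main__":
    encoded = to_bits("Yin")
    print(encoded)             # 01011001 01101001 01101110
    print(from_bits(encoded))  # Yin
```

Three letters become twenty-four bits. The word itself carries no shade of meaning in this form; whatever nuance it has must be layered on top of the flat stream of ones and zeros.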


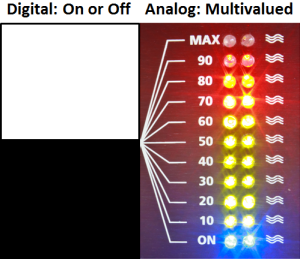

Yin and Yang represent a dichotomy, yet they serve as end points on a continuum. An exclusive dichotomy occurs when two things are exactly opposite and no shade of either can be placed on a continuum between them. It may be possible, with sophistry, to show that the real world is full of exclusive dichotomies, or it may be possible to show that no such thing exists. The point is simply that a dichotomy can be represented by a single bit in a computer, whereas a continuum requires more than one bit. A “fuzzy” dichotomy may require many more bits. Hence the recurring calls for analog computing.
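The difference in storage cost can be sketched in a few lines of Python. This is an illustration under simple assumptions: the continuum is scaled to the range 0.0 to 1.0 and cut into evenly spaced levels. The function names `bits_needed` and `quantize` are hypothetical.

```python
def bits_needed(levels: int) -> int:
    """Smallest number of bits that can label `levels` distinct values."""
    if levels < 1:
        raise ValueError("levels must be at least 1")
    # (levels - 1).bit_length() is an exact integer form of ceil(log2(levels)).
    return max(1, (levels - 1).bit_length())


def quantize(value: float, bits: int) -> int:
    """Map a value in [0.0, 1.0] to the nearest of 2**bits evenly spaced levels."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must lie in [0.0, 1.0]")
    top = (1 << bits) - 1  # index of the highest level
    return round(value * top)


if __name__ == "__main__":
    print(bits_needed(2))     # a strict dichotomy: 1 bit
    print(bits_needed(256))   # 256 shades of gray: 8 bits
    print(quantize(0.25, 1))  # one bit forces "mostly dark" to plain dark: 0
    print(quantize(0.25, 8))  # eight bits keep the shade: 64
```

With one bit, every shade collapses to one pole or the other. Each added bit doubles the number of distinguishable shades, but no finite number of bits captures a true continuum exactly.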

If we were to settle for rigid dichotomies of good and bad, light and dark, hard and soft, we could easily represent them with single bits in digital logic. A continuum, however, requires a much more complex representation scheme: orders of magnitude more complex for each continuum. As humans, we are obviously capable of detecting and distinguishing multiple shades of light and dark, hard and soft, good and bad. But how are these scales represented in the brain? Various levels of excitation and inhibition between individual neurons were discussed in my posts in Section 3 of this blog. In Section 5, we examine how various shades of gray are processed in the psyche. This ability to process shades of meaning is particularly important in language understanding.

Some of the larger discussions in Understanding Context raise the question of dichotomous logic. “The Greeks came up with many enduring metaphysical dichotomies. Substance~form was a biggie. Other still familiar ones are atom~void, stasis~change, chance~necessity, being~becoming, particular~universal, part~whole, discrete~continuous, quantity~quality, body~mind…the list goes on. And today we could add many more. Such as local~global, integrate~differentiate, information~entropy, figure~ground. Even mechanical~organic” (John McCrone: dichotomistic). Mr. McCrone goes on to show twelve ways in which dichotomies may be fuzzier than meets the eye:

- Dichotomies have vague beginnings.

- Dichotomies are dynamically developing not passively existent.

- Dichotomies develop in asymmetric fashion.

- Dichotomies thus depend on scale.

- Dichotomies always develop in both their directions at the same time.

- Dichotomies are separations not breakings.

- Dichotomies involve separation but also the mixing of the separated.

- The middles of dichotomies have scale symmetry.

- Dichotomies have only two poles because three or more is unstable.

- Organic causality is hierarchical or holistic.

- Organic logic is dichotomous with mechanical logic.

- Organicism is only modelling.

Fuzzy logic is about degrees of truth: shades of gray or other colors instead of black and white. Dr. Daniel Hammerstrom of the Defense Advanced Research Projects Agency (DARPA) “wants chipmakers to build analog processors that can do probabilistic math without forcing transistors into an absolute one-or-zero state, a technique that burns energy. It seems like a new idea — probabilistic computing chips are still years away from commercial use — but it’s not entirely. Analog computers were used in the 1950s, but they were overshadowed by the transistor and the amazing computing capabilities that digital processors pumped out over the past half-century.” (Wired: August 2012). Since digital computers can perform analog tasks with the right programming, analog chips are not strictly necessary, but they apparently consume enough less power to be justified on ecological grounds.
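As a small illustration of how a digital computer can handle shades of gray in software, the sketch below uses the standard Zadeh operators of fuzzy logic (minimum for AND, maximum for OR, one minus the value for NOT). The membership functions `is_dark` and `is_soft`, and their thresholds of 0.25 and 0.75, are hypothetical choices made only for this example.

```python
def fuzzy_and(a: float, b: float) -> float:
    """Zadeh AND: a conjunction is only as true as its weakest part."""
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    """Zadeh OR: a disjunction is as true as its strongest part."""
    return max(a, b)


def fuzzy_not(a: float) -> float:
    """Zadeh NOT: the complement of a degree of truth."""
    return 1.0 - a


def ramp_down(x: float, full: float, none: float) -> float:
    """Return 1.0 at or below `full`, 0.0 at or above `none`, linear between."""
    if x <= full:
        return 1.0
    if x >= none:
        return 0.0
    return (none - x) / (none - full)


def is_dark(brightness: float) -> float:
    """Hypothetical membership: how 'dark' a brightness in [0, 1] is."""
    return ramp_down(brightness, 0.25, 0.75)


def is_soft(hardness: float) -> float:
    """Hypothetical membership: how 'soft' a hardness in [0, 1] is."""
    return ramp_down(hardness, 0.25, 0.75)


if __name__ == "__main__":
    dark = is_dark(0.5)   # a mid-gray is half dark
    soft = is_soft(0.0)   # a pillow is fully soft
    print(fuzzy_and(dark, soft))  # 0.5
    print(fuzzy_or(dark, soft))   # 1.0
    print(fuzzy_not(dark))        # 0.5
```

Every value here is still stored as bits, many of them per number. That is the trade-off described above: the fuzziness is simulated on two-state hardware at a cost in storage and energy.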

My observations of the human mind, the world, and computing have led me to a set of assumptions and computer models that are fundamentally much fuzzier than hard-and-fast two-valued logic can represent. Yorrick’s early learning experiences are soft around the edges, and probably result in neural connections that are more vague and asymmetric than later neural connections will become. Let’s continue to follow Yorrick in his acquisition of knowledge and language, and see how you come out thinking.
