10 Nov Seeking a Universal Theory of Knowledge

As a fundamental premise for this post, this blog as a whole, and my life’s work, I propose that language and “real world knowledge” are inextricably connected, and neither functions well without the other. This is why, in my opinion, natural language processing (NLP) initiatives that focus exclusively, or even primarily, on language structure have significant limitations. Language structure theory is necessary for understanding how language works, but it is most often insufficient for determining a person’s intent when they produce written or spoken language. If the goal is language comprehension approaching human competence, then knowledge-based approaches that can resolve ambiguity based on meaning in context are essential. Thus I see a need for a universal theory of knowledge that is suitable for supporting knowledge-based language understanding automata.

| Understanding Context Cross-Reference | |
|---|---|
| Prior Post | Next Post |
| Evolution of Language | Context in Backward Chaining Logic |
| Definitions | References |
| knowledge ontology | Robinson 2010 |
| subjectivity comprehension | Søren Kierkegaard |
| context model | Gleiser 1997 |
| expressiveness perspectivism | René Descartes |

Another String Theory?

I posted on the democratization of knowledge a while back. I further suggested that for a computer to be able to engage in a dialog with a human, it needs to have good language skills and large amounts of knowledge. To bring maximum value to the human in this interaction, it should adapt to the human rather than forcing the human to adapt to the computer. This requires systematizing knowledge and making it computable, but does it mean replicating a whole brain, or the entire body of human knowledge?

Before I get into that, let’s look at some great ideas. As is often the case, I find that TED talks bring out the brilliant minds that hold useful answers. Stephen Wolfram has a search capability called Wolfram Alpha. Wolfram searches the computational universe to get useful patterns of knowledge. This is a fabulous approach and a really exciting thing to come to computing, and may be a harbinger of even better things to come.

In cellular automata, as the rules become more complex and as we sample what exists in the computational universe, we find unexpected and amazing patterns and knowledge that traditional computing approaches miss entirely. This formulaic approach to knowledge-based computing produces knowledge outcomes that are meaningful and useful. Wolfram Alpha, which uses a universal theory of computing, shows that we can capture knowledge and, using human language as the interface, deliver valuable knowledge to users. So how about a universal theory of knowledge?
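To make the cellular automata idea concrete, here is a minimal sketch of an elementary (one-dimensional, two-state) cellular automaton. It is only an illustration: the function names `step` and `run`, the wrap-around edges, and the grid size are my own assumptions, and this is not how Wolfram Alpha itself is implemented. The rule number (30 by default) encodes the output for each of the eight possible three-cell neighborhoods.

```python
def step(cells: list[int], rule: int = 30) -> list[int]:
    """Apply one update of an elementary cellular automaton.

    Edges wrap around (an assumption made to keep the sketch short).
    """
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        # Read the neighborhood as a 3-bit number between 0 and 7.
        index = (left << 2) | (center << 1) | right
        # The matching bit of the rule number is the cell's next state.
        out.append((rule >> index) & 1)
    return out


def run(width: int = 31, steps: int = 15, rule: int = 30) -> list[list[int]]:
    """Start from a single live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Changing `rule` to another value between 0 and 255 samples a different corner of this small computational universe. Some rules die out or repeat, while others, like rule 30, produce patterns that look surprisingly irregular for such a simple formula.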

The Human Side of Knowledge

On Being is a forum on ideas about life and humanity, useful elements when considering the nature of human knowledge. There was a recent interview with Marcelo Gleiser, author of “The Dancing Universe” (Gleiser 1997), in which the author opposes the idea that science and spirituality are irreconcilable. Mystical, philosophical, and scientific ideas about knowledge have been debated throughout the history of man on Earth.

Many of the ideas of history’s great thinkers, including Copernicus, Galileo, Kepler, Newton, and Einstein, can help us unlock the secrets of knowledge and the human brain. In the same interview, Krista Tippett and Marilynne Robinson, author of “Absence of Mind” (Robinson 2010), discuss how cold scientific approaches to understanding knowledge may fail to account for the complexity and subjectivity of knowledge. Does a universal theory of knowledge require accommodations for subjectivity and relative knowledge? Even if knowledge is objective, human thought is not. Søren Kierkegaard and René Descartes proposed that the subjectivity of thought affects the nature of knowledge as it is available to the mortal man.

Nietzsche’s perspectivism, and his brushes with nihilism, may not lead us closer to a universal theory of knowledge, but they give us an important key to avoiding brittleness: there are fuzzy elements of knowledge and language that we cannot ignore if we want to base algorithms on a universal theory (UT) and use them to successfully understand human intent.

Key Components of a UT

Let us assume for the purpose of this discussion (or at least for the duration of this post) that a universal theory of knowledge can, and should, account for both objective facts that exist independent of the human mind and subjective interpretation that must take shape once knowledge enters the sphere of human thought, possibly deforming the independent facts.

Wolfram’s model is based on objectivity and I think there is much we can profitably borrow from it. I am preparing to propose a model of knowledge that states all facts as being part of a single universal whole, but treats each as having some confidence value within a given context. In other words, as I seek a universal descriptive model for knowledge that may be used for computers, brains or for independent knowledge, I will seek a fundamentally fuzzy model. This will be the basis for a knowledge ontology. The model should be able to be tested against known facts, against subjective opinions, against human thought processes and computational paradigms such that it proves useful in each of these contexts.
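As a rough illustration of what “some confidence value within a given context” could look like in code, here is a minimal sketch. Everything in it is a placeholder of my own for the purpose of illustration: the class names `Fact` and `KnowledgeBase`, the context labels, and the confidence numbers are assumptions, not the model I will eventually propose.

```python
from dataclasses import dataclass, field


@dataclass
class Fact:
    """A statement whose confidence depends on the context it is used in."""
    statement: str
    default_confidence: float = 0.5  # used when a context has no explicit value
    by_context: dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Confidence is treated as a fuzzy degree between 0 and 1.
        for value in [self.default_confidence, *self.by_context.values()]:
            if not 0.0 <= value <= 1.0:
                raise ValueError("confidence must lie between 0 and 1")

    def confidence(self, context: str) -> float:
        return self.by_context.get(context, self.default_confidence)


@dataclass
class KnowledgeBase:
    """All facts live in one whole; context only changes how much we trust each."""
    facts: list[Fact] = field(default_factory=list)

    def believed(self, context: str, threshold: float = 0.5) -> list[str]:
        return [f.statement for f in self.facts
                if f.confidence(context) >= threshold]


if __name__ == "__main__":
    # Hypothetical values chosen only to show the word "bank" in two contexts.
    kb = KnowledgeBase([
        Fact("'bank' means a financial institution",
             by_context={"finance": 0.95, "river trip": 0.05}),
        Fact("'bank' means the edge of a river",
             by_context={"finance": 0.05, "river trip": 0.9}),
    ])
    print(kb.believed("finance"))
    print(kb.believed("river trip"))
```

The design choice worth noting is that no fact is ever removed from the whole. The same store answers differently depending on the context supplied, which is the kind of ambiguity resolution a knowledge-based language system would need.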

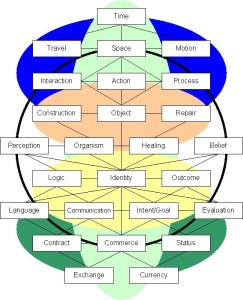

Any knowledge model, or ontology, is based on symbols that express the knowledge itself, and it shows how the individual elements of knowledge work together to express a reality. The illustration above shows fundamental knowledge elements at the top, including time, space and motion, descending toward more transient (and probably less law-abiding) elements at the bottom. Clearly representing and testing the laws that govern interactions between knowledge components is key to validating the model, and to determining its utility in supporting automated processes that seek to mimic human cognitive capabilities.

The final test is: Will the model be able to express any possible knowledge? If so, will it be possible to create a system that can hold the entire body of current and future human knowledge? And if so, can that knowledge be marshaled in service of algorithms that not only tap into intelligence, but demonstrate competence in complex brain tasks? I think it’s possible, and I think it’s right around the corner.
