20 Oct High 5s of Intelligent Information Modeling

Joe Roushar – October 2015

Quantitative data is easy to make into useful information by establishing correct associations and providing human experts with the right slicing and dicing tools. But in its native format, data is not independently meaningful. Many qualitative content sources are narrative, born as whole information. Such content is advanced beyond data because of the built-in associations, but inevitably more difficult for machines to process because of natural language format with its varied structures and inherent ambiguity. With more business questions requiring both quantitative and qualitative sources to get complete answers, organizations need reliable ways to bind quantitative and qualitative sources together in advanced BI, reports, searches and analytic dashboards. As the speed of business accelerates, the time it takes to manually correlate disparate sources with mutually reinforcing content to support better insights is becoming an unsustainable luxury, and automated tools to build actionable knowledge are becoming a competitive necessity.

In today’s post, I propose ways to use intelligent information modeling to accelerate the delivery of actionable knowledge at all levels of an enterprise. This will set the stage for a discussion of how to automate such an ambitious transformation.

| Understanding Context Cross-Reference |

|---|

| Click on these Links to other posts and glossary/bibliography references |

|

|

|

| Prior Post | Next Post |

| Data Convergence at Velocity | Enterprise Metamodel Governance |

| Definitions | References |

| canonical model search | Domingos 2015 Inmon 2015 |

| meaningful associations | Aristotle Zechmeister 1982 |

| Big Data query SPARQL | McCreary 2014 Glass 2015 |

| database convergence | Sowa 1984 Schank 1986 |

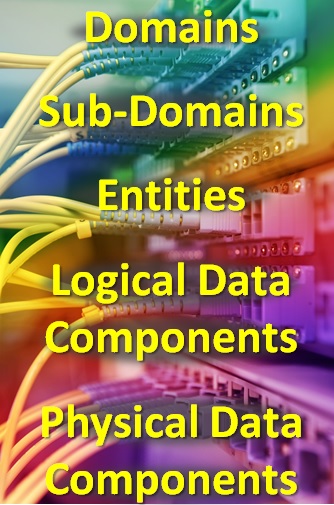

Let’s begin with a taxonomy of the meaningful elements that define enterprise information: a canonical model. A canonical model can be as simple as a table showing the systems of record (SOR) for each core category of information, and as complex as a hierarchically linked set of concept networks in an ontology describing the concepts that make up the business, and the contexts in which they are understood. A useful way of organizing the taxonomy of meaningful elements in an enterprise looks like this:

Let’s begin with a taxonomy of the meaningful elements that define enterprise information: a canonical model. A canonical model can be as simple as a table showing the systems of record (SOR) for each core category of information, and as complex as a hierarchically linked set of concept networks in an ontology describing the concepts that make up the business, and the contexts in which they are understood. A useful way of organizing the taxonomy of meaningful elements in an enterprise looks like this:

- Domains

- Such as [health insurance]

- Sub-Domains

- Such as [benefits] and [claims]

- Entities

- Such as [benefit definition] and [filed claim]

- Logical Data Components

- Such as [name of benefit] and [date of claim]

- Physical Data Components

- Such as [benefit_name] = “inpatient surgery”

- and [claim_filed_date] = “10/16/2015”

Note that the only level for which I have shown actual values is at the lowest level. Traditional software systems usually know nothing and care nothing about anything above the physical component level. Queries needed to get the information for system users often contain joins that realize the next higher, or “parent” level of concept. But all the information, even if it embodies taxonomically related concepts (such as an order, an order_detail_line, and an order_detail_line_total_cost) dwells entirely within the lowest level of our enterprise information model taxonomy.

I’ve frequently suggested that the universe we know can be organized hierarchically. This hierarchy sometimes divides cleanly, including dichotomies like biotic (with the taxonomy of living things) vs. abiotic, and particles vs. forces. Sometimes, however, the relationships and inherited characteristics are fuzzier and more difficult to assemble into nice pyramids.

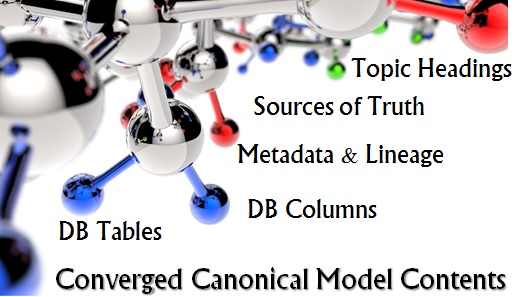

To be effective, the canonical information model and glossary should be hierarchical to at least 3 to 4 levels of depth and cover:

- All semantically meaningful table names of every core and many peripheral databases

- Significant, semantically meaningful column names of every core database

- Significant, semantically meaningful section or clause topic of unstructured data

- Source of establishment and source of truth maps for every level 1 and 2 concept

- Source of metadata and lineage for every auditable element of the information model

Getting this information for structured data is often easier and more straightforward for IT people because we have long separated the data from the process in two, three and more tiers of our information systems architectures. Documents, however, do not fit nicely into a tiered model. I’ll post more about good approaches for architecting and curating unstructured content in the enterprise.

But, manually developing and managing this is labor intensive and costly. Thus, automated tools are needed to:

- Build the model

- Start with a top level model agreed upon by key stakeholders then test it and expand it with machine learning

- Empower information stewards in each domain with supervised machine learning tools to build and maintain domain models

- Support advanced, semantic search across structured and unstructured sources

- This may mean putting a big data repository in the middle and using NoSQL or SPARQL with a set of prefab semantic services; or

- Create a semantic layer and direct queries and search requests to the semantic agent to figure out where to find the information you need

- Support queries simultaneously touching relational databases and big data sources

- Again, this could mean pumping all the important information into big data sources or putting semantic agents in a new layer to access existing information

- Deliver metadata and lineage for every auditable element of the information model

- This can be the expensive part if you need to retrofit instrumentation into all your systems; or

- create listeners and give them access to the UIs, the back-end databases or logs to capture and categorize all new activity

- Enforce source of establishment and source of truth designations

- Use data governance processes to master your systems and data (I’ll post more about this later).

Creating an enterprise canonical information model is essential to maximize the following Information Architecture outcomes:

Creating an enterprise canonical information model is essential to maximize the following Information Architecture outcomes:

- Information Asset and lifecycle Management

- Common Data Definition and Vocabulary

- Master Data Management

- Data Quality Profiling

- Value and Risk Classification

While it is possible to deliver all of these without beginning with a canonical model, using the model as a starting point, and using each of these outputs to reinforce the Enterprise Information Management strategy will produce amazing long-term benefits. Here’s a breakdown of what each of these looks like:

Output: Information Asset and Lifecycle Management

Output: Information Asset and Lifecycle Management

Value: Information is a business asset, similar to other balance sheet assets, and if managed and leveraged by the business to maximize its value and minimize its cost throughout its lifecycle, will grow in value over time.

Actions:

Begin with a canonical model of the enterprise showing all core domains, and canonical models of each core domain.

Consider Information management as more than a technical task but a fundamental business responsibility.

Engage business stakeholders as owners and IT stewards to help define the characteristics and requirements for how the business data should be managed (stored, accessed, secured, defined, organized and shared).

Implement a comprehensive information life cycle management strategy to manage the flow of data and associated metadata from the creation and initial storage to the time it becomes obsolete and archived, then destroyed.

Ongoing Behaviors:

- Organize new categories of information to align with business organizations and canonical domain classifications.

- Separate ownership versus stewardship of information asset management.

- Business stakeholders (owners) should be responsible for management policies and processes, IT should be responsible for executing and maintaining compliance to those policies and processes (stewards).

- Plan and govern the uses of data to maximize business efficiency by assigning risk/cost/benefit values to the information asset at the entity level.

- Keep up to date records management and retention processes/policies and infrastructure

- Make data governance a recurring process for ensuring adherence to the guidelines defined around each sub-domain of data that requires lifecycle management

- Information lifecycle management can use automated processes to ensure compliance with retention regulations.

Output: Common Data Definition and Vocabulary Glossary

Output: Common Data Definition and Vocabulary Glossary

Value: Implementing a single unified and high-level semantic business data model that organizes all types of information and their relationships to each other across the business will shorten capability development and deployment times.

Actions:

Build and maintain a common taxonomy of business data types across the enterprise as a critical foundation of a consistent enterprise information architecture.

Use machine learning, where possible, to build and maintain the glossary.

Normalize the understanding of each entity (and possibly logical data component) to account for differing perspectives of each stakeholder community.

Where a common understanding for a term cannot be achieved, create, define and publish a new term along with its synonyms in different business domains.

Ongoing Behaviors:

- Reduce fragmented and inconsistent vocabulary that creates significant data quality problems that impact operational efficiencies.

- Bring interested communities together regularly to update common definition and vocabulary in a preemptive way to address data quality issues.

- Use the glossary to ease the integration of new applications and trading partners into the existing business information portfolio.

- Use the glossary and synonymy model to adopt industry data model standards into the common enterprise data taxonomy.

- Gradually create definitions and vocabulary for key elements that are shared and integrated.

- Apply the machine learning techniques to glossary maintenance to improve the semantic rigor.

Output: Master Data Management (MDM)

Output: Master Data Management (MDM)

Value: Giving all business data an authoritative source ensures that you can get the same answer to the same question no matter what channel or app you use, and increases agility when changes are needed.

Actions:

Evaluate all sources of each data entity and component for quality/integrity issues.

For each sub-domain and entity, designate one source of truth to control data quality when there is even a hint of information disparity.

Reduce system complexity and overall cost, while increasing agility by mastering the data using the canonical model with definitions from the glossary.

Ongoing Behaviors:

- Incorporate knowledge of exactly what system is responsible for owning the “true” value of each entity in every IT project.

- Architect in MDM at the beginning of each new project, as it is much more costly to retrofit existing systems.

- Use MDM as a location to get a fully defined data model for each sub-domain (e.g. the customer data model in the Customer Data Service).

- Go to a service layer for MDM to enable faster and more reliable system integrations than point-to-point integrations.

Output: Data Quality Profiling

Output: Data Quality Profiling

Value: Ensuring that the quality of the enterprise data is commensurate with its value and its risk reduces downstream costs of inaccuracies and miscues that could impede competitiveness and compliance.

Actions:

Incorporate good data profiling tools and put them in the hands of qualified profilers.

Measure data quality by profiling completeness, accuracy, timeliness and accessibility of the data.

Whenever stale or incomplete data is identified, either through profiling or by spotters in business or IT, triage and correct it with alacrity.

Reduce business risk and inefficiency by streamlining and quarantining or flagging information whose quality cannot realistically be ensured.

Ongoing Behaviors:

- Aggressively correct processes that generate records with missing elements.

- Address data gaps by completing records or streamlining data models by removing or segregating problem components.

- Establish freshness standards for information in each system, describing at what time interval it should be considered up-to-date within the margin of error as defined by the standard (e.g. updated every 24 hours).

- Don’t allow recurring data errors to become systematic.

- Use validation procedures to check all new record entries and updates for accuracy.

- Ensure data is understandable by its consumers (human users or automated systems) and reconsider storing raw data formats that consumers cannot read.

Output: Value and Risk Classification

Output: Value and Risk Classification

Value: A classification of an information asset’s value and risk will provide the best foundation for its enhancement, management and governance.

Actions:

Develop and socialize fundamental understanding of the value of each information asset to the business, and the business risk if is exposed, corrupted or lost.

Consider the value and risk classifications essential to determining the appropriate strategies and investment levels for protecting and managing each sub-domain, entity and key logical data component.

Ongoing Behaviors:

- Prioritize and implement information management processes and security safeguards commensurate with value and risk classifications.

- Establish a comprehensive business risk management program based on an understanding of the value and risk of the key information assets.

Yes, I know – I’ve given you way too much to do in one budget cycle. You can begin, however, by creating a valid enterprise canonical model, then cherry-picking the actions and ongoing behaviors that will bring the most value in your most important business areas.

The solution to the high cost of doing it all manually:

Provide intelligent information mapping with semantic machine learning to:

- Scan databases and documents

- Build and update the enterprise canonical model

- Create shared semantic layer services to:

- Automatically determine where to find answers by concept

- Automatically generate metadata for search and query

- Automatically determine where to place documents by content

- Track information lineage across processes

- Make processes models much more intelligent

I’ll make each of these processes more clear in upcoming posts.

| Click below to look in each Understanding Context section |

|---|

| 1 | Context | 2 | Brains | 3 | Neurons | 4 | Neural Networks |

| 5 | Perception and Cognition | 6 | Fuzzy Logic | 7 | Language and Dialog | 8 | Cybernetic Models |

| 9 | Apps and Processes | 10 | The End of Code | 11 | Glossary | 12 | Bibliography |

[…] changes: even those that are mostly evolutionary, but some revolutionary? It’s time for Enterprise Information Management (EIM) to grow up and assume its rightful role at the center of competitive advantage. EIM refers to […]

[…] content model taxonomy begins with an enterprise canonical information model with domains, categories and concepts. Every database and some documents should map to a category […]

[…] changes: even those that are mostly evolutionary, but some revolutionary? It’s time for Enterprise Information Management (EIM) to grow up and assume its rightful role at the center of competitive advantage. EIM refers to […]

[…] changes: even those that are mostly evolutionary, but some revolutionary? It’s time for Enterprise Information Management (EIM) to grow up and assume its rightful role at the center of competitive advantage. EIM refers to […]

[…] High 5s of Intelligent Information Modeling (Specific capabilities for the automation…) […]