29 Jan Will Computers Ever Understand Me?

By: Joe Roushar – January 2020

I think I understand

Ideas of devices becoming sentient need not be frightening. Thinking and being seem to be tightly bound: Je pense, donc je suis (Descartes 1628). Some have riffed on René’s theme to suggest the idea that sentience is defined by symbolic thought and expression (language), but the more we know about non-human species with advanced cognitive capabilities, the less distinction we see in our unique ability to speak. There may be an intermediate step between thinking and understanding: comprehending. This post focuses on human language dialog and computational efforts to replicate comprehension to the extent that digital software can interpret the intent of complex human speech and writing.


Understanding does not require two sentient beings communicating using language: a single being can develop, independent of a symbolic framework, the mental capacity to interpret phenomena and act appropriately based on observed causes and effects. Humans without hearing or speech do it all the time. Therefore, language is not needed for understanding. Language is simply a convenient vehicle for sharing knowledge and fostering mutual understanding. Because we now live in a time, place and stage of evolution where there are many sentient beings interacting, shared understanding is both indispensable and inescapable. While I shall not try to define or redefine res cogitans, I think a frank discussion of understanding and computers is timely as Artificial Intelligence (AI) is surging toward the mantle of understanding.

As understanding and consciousness do not depend on language (Koch 2019), those of us endowed with the gift of speech must step outside ourselves and acknowledge the primacy of all the things in the universe that can be understood, and admit that language is a small, weak and ambiguous symbolic wrapper that attempts to describe physical things and abstract concepts larger than anything: concepts that often defy description.

Understanding Context Cross-Reference: Links to other posts and glossary/bibliography references

| Prior Post | Next Post |
|---|---|
| Intent Models for Competitive Advantage | Knowledge is the New Foundation for Success |
| Definitions | References |
| Superintelligence, ambiguity, semiotics | Sciforce 2019, Allen 1987 |
| meaning, context, taxonomy, intent | Jackendoff, Lamb 1966, Nirenburg 1987 |
| empathy, fuzzy logic, Machine Learning | Domingos 2015, NL References |

Humans learn language from infancy, and develop the ability to “understand” complex symbolic strings of spoken and written words. That understanding is often linked to gestures, including facial expressions. I propose that language understanding is laden with ambiguity on multiple levels, most of which lies beyond the abilities of “conversational bots”, search engines, personal digital assistants and mobile voice activation systems. What can “understanding” possibly include?

1. correctly interpreting the intended meaning of a word or gesture

2. correctly interpreting the meaning of a phrase

3. correctly interpreting the meaning of a simple sentence

4. correctly interpreting the meaning of a complex sentence

5. correctly interpreting the meaning and intent of a phrase (sometimes opposites)

6. correctly interpreting the meaning and intent of a complex sentence

7. correctly interpreting the meaning and intent of a complex sentence and gestures

8. correctly interpreting multiple meanings and intent of a phrase, gesture or sentence

Human children, often from a young age, can understand language all the way to level eight. I know of pre-teens who take delight in reading Shakespeare and untangling the multiple meanings. Sadly, I know of no system that can approach human competency in achieving even level one of Natural Language Understanding (NLU). There are many excellent tools for Natural Language Processing (NLP – not the same as understanding), some of which I use frequently. But even though Siri, Alexa and other speech-wielding automata bandy words, and often respond to requests appropriately, the idea that they “understand” what is said is somewhere between dubious and ludicrous. I would be delighted to be proven wrong on this, but I’m not holding my breath. Why care? Because most Artificial Intelligence (AI) capabilities revolve around accurate models of “intent” and “meaning”, and a pinnacle of artificial intelligence (accurate, automated, multi-lingual, multi-directional translation) requires at least level seven understanding.

Decomposing Understanding

The creation of speech or writing doesn’t guarantee understanding. Understanding is based on an informal agreement between the speaker/writer and the hearer/reader that the symbols in the shared language have specific definitions, and that specific grammatical patterns of phrases and sentences used to express intent and fact enable mutual empathetic collaboration. Even within a single language, different groups divided by age and gender interpret the same words and phrases differently. But as wisdom increases, people become better able to bridge those gaps in understanding. Computers, on the other hand, have never exhibited the ability to understand human intent nor to empathize. Thus, NLU in computers has not yet been achieved at any significant scale. Arguably, we can use the pattern matching techniques of NLP to decompose words, phrases and sentences to extract and classify various attributes of the utterance or written text: taxonomy (physical vs. abstract), meronomy, causality, objective or goal, time, space, identity and preference, society and interaction pattern. But even doing all these, which no existing software does coherently, will not be enough to capture the richness of human intent. To do that, we will need to add fuzzy approaches to interpret subtext and exformation and invent digital solutions that can process emotional responses.

Being a good or “active” listener is often necessary to cross the boundary between hearing and understanding, especially when we want to comprehend the true intent of the writer or speaker, and go beyond that to understand why they hold a certain belief. Active listening involves far more of the brain (and body) than passive listening or just hearing. Interestingly, lawyer David Boies’ ability to listen and catch all he hears has helped him achieve international prominence in his field (Gladwell 2013) by winning important cases. I use the ambiguous word “catch” because I intend it to embody a lot of complex processing. Cognitive investment is needed to return empathy, and humans who are good at it tend to be considered to have high “emotional intelligence”. Sadly, computers are fundamentally not good listeners – at least not today. We’re working to change that, and to imbue computers with the ability to go beyond sentiment analysis to sentimentality and empathy.


Do we want computers to understand us?

Privacy advocates’ objections notwithstanding, I think there is a need for fully automated NLU with human level competency. The most compelling case, from my perspective, is in using AI to improve transportation, safety, business, the environment, health, government and commerce – thereby improving people’s standard of living. As noted above, models of intent and other meaning models such as causality, meronomy, sequence, time and space are critical pillars of AI and NLU. There are systems that “know” about each of these aspects of knowledge, but no single system brings them all together to fully understand the meaning of your words. Again – do we want computers that are that smart? Might they turn against us and take over the world? While it would be difficult to say that it will not happen, there are thought leaders in this area who have proposed strategies to reduce the possibility of tragedy (Nick Bostrom 2014).

Which comes first – NLP or NLU?

I read a very good, and mostly correct, article comparing NLP and NLU. In it the authors (Sciforce) stated that “it’s best to view NLU as a first step towards achieving NLP: before a machine can process a language, it must first be understood.” I find this assertion remarkable, because many experts who understand the complexities of natural language would assert that NLP has been taking place all over the world for decades (since the 1950s according to the article), while true NLU beyond the simplest of sentences has not yet been achieved to a usable level anywhere.

Keyword matching algorithms and “conversational” bots of today are better than the search engines and Interactive Voice Response systems from which most evolved, and they deliver tremendous value. But human language is inherently ambiguous, and no commercially available system can effectively resolve ambiguity and carry on free dialog with humans. There is no commercial system or approach capable of understanding the complex meanings of enough human expressions to go head to head with a person for more than a few sentences. I remember my fascination with Eliza – the Rogerian Psychotherapist bot that reflected simple expressions to give the impression of a good listener. But frankly, it’s a toy that understands nothing. NLU does not precede, but follows NLP, and ours uses processes that are not yet in the existing NLP nor NLU arsenals.
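To make the Eliza point concrete, here is a minimal sketch of reflection by pattern matching. The patterns, the pronoun table and the function names are illustrative assumptions, not the rules of the original Eliza script. Nothing in the code models meaning: it matches a surface pattern, swaps a few pronouns and fills in a template.

```python
import re

# Pronoun swaps used to "reflect" the speaker's words back at them.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

# Illustrative patterns only (hypothetical, not the original Eliza rules).
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(utterance: str) -> str:
    """Return a canned reflection for the first matching pattern."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            return template.format(reflect(match.group(1)))
    # No pattern matched: fall back to a generic prompt.
    return "Please go on."


print(respond("I feel ignored by my boss."))
```

The reply sounds attentive, yet the program would respond in exactly the same way to a sentence that is sarcastic, deceptive or nonsensical, which is why the impression of a good listener is only an impression.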


What is missing?

Knowing that language is frequently ambiguous, humans must often ask clarifying questions to determine the intent of a speaker, so it stands to reason that a system must be able to do the same if the goal is human level competency. Many conversational bots have “skills” that include asking canned or pre-defined questions to gather information needed to comply with a request, but the skills are usually hard-coded as processes for filling in predicted blanks rather than resolving ambiguity. The nature of ambiguity may be more complex than meets the eye. Poetry is a good example of intentional ambiguity as it may contain hidden meanings and layers of meaning. Anaphora, metaphorical expressions, deception, double entendre, and other commonly occurring phenomena in language require not only the ability to ask clarifying questions, but to infer multiple possible meanings, and sometimes accept that more than one meaning is intended. This implies a fuzzy approach that can deliver two or three prioritized answers. In addition to the fuzzy logic needed to deliver more than one interpretation, this implies the need for knowledge more than information.
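The idea of delivering two or three prioritized answers, and asking a clarifying question when they are too close to call, can be sketched as follows. This is a minimal illustration under stated assumptions: the `Interpretation` class, the plausibility scores and the `margin` threshold are all hypothetical, and the scores are assumed to come from some upstream knowledge-based or fuzzy model that is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Interpretation:
    meaning: str
    score: float  # plausibility in [0, 1]; assumed to come from an upstream model


def rank_interpretations(candidates, top_n=3):
    """Keep the top_n candidate readings, most plausible first."""
    return sorted(candidates, key=lambda c: c.score, reverse=True)[:top_n]


def needs_clarification(ranked, margin=0.15):
    """True when the two best readings are too close to choose between."""
    return len(ranked) > 1 and (ranked[0].score - ranked[1].score) < margin


def clarifying_question(ranked):
    """Offer the two leading readings back to the speaker."""
    options = " or ".join(f'"{c.meaning}"' for c in ranked[:2])
    return f"Did you mean {options}?"


# Hypothetical readings of the ambiguous sentence "I saw her duck".
candidates = [
    Interpretation("I saw the duck that belongs to her", 0.55),
    Interpretation("I saw her lower her head", 0.50),
    Interpretation("I cut her duck with a saw", 0.05),
]

ranked = rank_interpretations(candidates)
if needs_clarification(ranked):
    print(clarifying_question(ranked))
```

Keeping the ranked list rather than a single winner also leaves room for cases such as poetry or double entendre, where more than one reading may be intended and no clarifying question is needed.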

Existing search and query results are information NOT knowledge:

- Information is connected data that people need to analyze

- Knowledge is actionable information

No matter how much machine learning is applied to strictly statistical approaches to natural language understanding, they will never yield the level of accuracy possible with a robust holistic “meaning” based approach that begins with a general knowledge ontology that embodies taxonomy, causality, meronomy, semantic primitives, time and space knowledge.

Quality Metrics for NLU

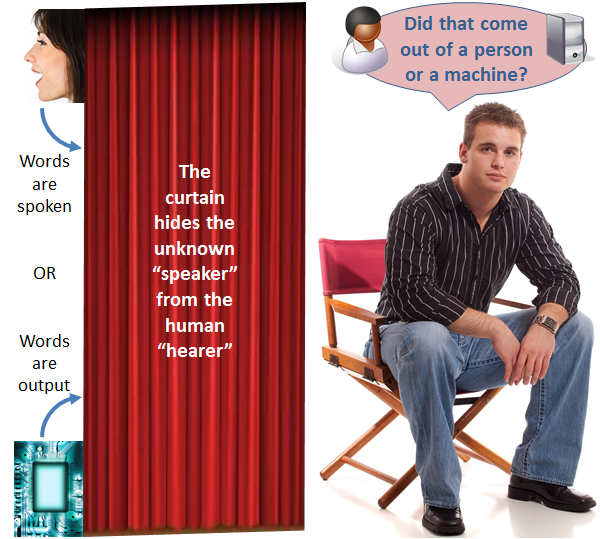

Practitioners often consider the Turing Test a simple but naive mechanism to determine if a system is approaching human competency in language understanding. As social interaction is a key point in natural language communication, the social simulation is somehow appealing. Quoting a prior post: The Turing Test (Turing, 1936) is a discriminator that uses a human judge to test the “intelligence” of a device. Basically, the Turing Test is just a blind product test similar to those done in laundry-detergent commercials. Instead of stained pairs of pants, however, the judge observes some form of output and tries to guess if a person in the loop produced the output or if it was done automatically. My depiction uses words, natural language output, as the subject of the test.

Since humans can be deceived by ingenious devices, the Turing Test is hardly foolproof. Indeed, under the strictest interpretation of intelligence, a machine capable of learning as rapidly and fully as a person learns is difficult for most people to imagine. In this blog, I want to make it not only imaginable, but also foreseeable (the creative part will have to wait for my future blog). By focusing on advanced sensory processing and information storage and retrieval as the processes to imitate, even interpreting language by computer becomes possible.

In the Turing test, a person (the judge) sits at a terminal in one room, connected to a computer in another room, and types in questions. Either the computer answers, or a person intervenes to provide the answer. A machine is determined to exhibit intelligence if the judge guesses wrong, thinking the computer’s answer was provided by a human, or if the judge is unable to say one way or the other. There are many more questions to be considered in evaluating a system, including what makes the machine or its program intelligent and, perhaps most important, how complex the problem was: simple enough for a clever canned response, or complex enough that the interpretation resembles human level understanding. Again, a fuzzy knowledge based solution is the best way to pass the test.

Bi-Directional Translation

Another popular colloquial way to evaluate natural language understanding is the bi-directional translation test. Put a moderately complex sentence into a translator and translate it from the “source” into the “target” language of your choice, then put the translation into the same translator, reverse the source and target languages, and see how close the reverse translation comes to the original source input. This can actually be a fun party game because the results can be quite amusing, as are the games that see who can get a conversational bot on their phone to give the most hilarious wrong answer.
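The round trip described above can be sketched in a few lines. This is a rough illustration, not a real evaluation harness: `translate` is a hypothetical callable standing in for whatever translation service is being tested, and the word-overlap ratio from Python's standard `difflib` module measures surface wording only, so it is at best a crude proxy for whether the intent survived.

```python
from difflib import SequenceMatcher
from typing import Callable, Tuple

# Hypothetical translator signature: (text, source_lang, target_lang) -> text.
Translator = Callable[[str, str, str], str]


def round_trip_score(
    sentence: str, source: str, target: str, translate: Translator
) -> Tuple[str, float]:
    """Translate source -> target -> source and compare with the original.

    Returns the back-translation and a word-level similarity in [0, 1].
    """
    forward = translate(sentence, source, target)
    back = translate(forward, target, source)
    original_words = sentence.lower().split()
    back_words = back.lower().split()
    ratio = SequenceMatcher(None, original_words, back_words).ratio()
    return back, ratio


def identity_translator(text: str, source: str, target: str) -> str:
    """Toy stand-in that changes nothing, so the round trip is lossless."""
    return text


back, score = round_trip_score(
    "The spirit is willing", "en", "ru", identity_translator
)
print(back, score)
```

A score near 1.0 only says the words came back; a paraphrase that preserves the intent perfectly could score low, and a near-identical sentence with one crucial word changed could score high. Judging intent still takes a human reader or a meaning-based comparison.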

The goal of systems designers should be to take the fun out of it by delivering high quality answers to arbitrarily complex questions, and forward and reverse translations that match the intent of the source input.

Here are some definitions that are directly related to understanding:

The goals of fully automated NLU and intelligent dialog are elusive. I have sometimes been told that what we are seeking to do can’t be done, and if it could, companies that have been investing zillions of dollars in NLU for years would get there before us. I think I understand why, despite the efforts and treasure expended, the nut has not yet been cracked. The dodecahedron I use to represent the sections of this blog is intended to reflect the multi-disciplinary facets of this R&D. Widely accepted rules that cripple the tech giants are no longer valid. I often feel like David up against a Goliath or a squad of Goliaths. But David’s defeat of the overgrown Philistine was probably a foregone conclusion (Gladwell 2013) because David refused to follow the rules. David looked past the obvious difficulties and decided that he knew something important that all the others missed. I feel the same – and I’m on the move with my smooth stone and sling in hand.

